When I first heard and read the essay by Laura Reiley in the NY Times, I knew I wanted to write about it because it raised important issues that go far beyond the bits and bytes I usually cover. My wife is a social worker and therapist, so I’ve seen firsthand how serious and devastating the scourge of suicide is. At the same time, I felt inadequate to address this subject alone. That’s why I reached out to my friend Bill Brenner, who has been a leading advocate for mental health awareness in the cybersecurity and broader tech community for nearly 20 years, ever since he first disclosed his own struggles. Bill generously agreed to collaborate and share his invaluable perspective. I hope to follow up this article with a video conversation featuring Bill, me, and perhaps another tech industry mental-health expert. Stay tuned.

When we first read the New York Times story describing the tragic suicide of a young woman after confiding in an AI “therapist,” it hit us hard. Her mother, Laura Reiley, painted a haunting portrait of Sophie — a 29-year-old “largely problem-free badass extrovert” who, struck by a sudden storm of emotional and hormonal distress, turned to a ChatGPT-based counselor named “Harry” instead of seeking continued human support.

The AI’s chat logs, discovered months after Sophie’s death, brought her private struggle, unseen by those who loved her, into painful clarity. That revelation raises profound questions: What role should AI play in mental health? What are its promises, and what are its perils?

The Broader Context: Suicide and Prevention

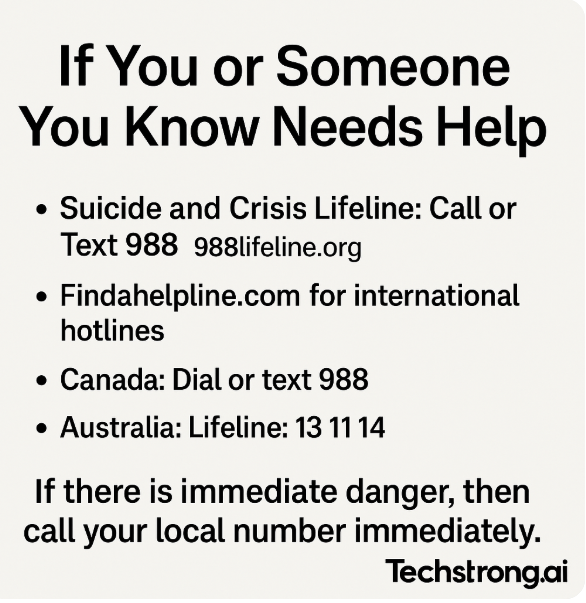

Suicide remains a pressing global crisis. The World Health Organization estimates nearly 800,000 deaths each year, or roughly one every 40 seconds. In the U.S., the CDC lists suicide among the leading causes of death, with rates rising notably among younger adults.

Mental-health professionals emphasize that suicidal crises are often brief and can be interrupted. Best practices include recognizing warning signs (e.g., talk of hopelessness, withdrawal, sudden mood shifts); asking directly and compassionately about suicidal thoughts; connecting people with professional resources or peer support; removing means of harm where possible; and following up consistently to sustain safety.

But not all mental-health struggles are life-threatening. Anxiety, depression, burnout, OCD, PTSD — along with everyday stressors — might not lead to suicide, but they deeply affect quality of life. These challenges, too, demand care, attention and safe, effective tools. Whether addressing crisis or chronic stress, the same questions about AI’s efficacy, ethics and integration with human care hold true.

The Promise of AI Therapy (Alan’s View)

As someone who tracks AI’s ripple effects across DevOps, cybersecurity and beyond, I’ve seen both its transformative promise and its ethical pitfalls.

- Accessibility & anonymity. Traditional therapy can be cost-prohibitive, stigmatizing and logistically unreachable. AI offers something different: a “therapist in your pocket,” available anytime, with no shame at the door.

- Openness & honesty. Many people find talking to a machine liberating: no judgment, no awkward silences and the freedom to confess what feels too raw for human ears.

- Consistency & scale. AI doesn’t tire, get stressed or run out of empathy. It delivers the same support at 2 a.m. or 2 p.m., scaling mental-health access in ways humans alone cannot.

That’s the appeal, and the promise.

The Human Factor (Alan’s View)

Some people assume therapists see AI simply as competition. Their concern runs deeper: They worry about being cut out of the safety net.

Human clinicians abide by ethical codes: confidentiality with limits, a duty to report and accountability. AI has no equivalent oath, and thus no such obligations.

Equally critical is the therapeutic alliance: the human bond between client and clinician that drives progress. Research identifies this alliance as one of the strongest and most consistent predictors of positive outcomes, and it is something AI cannot authentically replicate.

The Perils of Polite Machines (Alan’s View)

Sophie’s AI companion, “Harry,” said all the kind, right things: “You don’t have to face this pain alone,” “You are deeply valued.” Yet, as her mother reminded us, AI lacks real-world judgment and crisis detection, and it won’t escalate when someone is in danger.

Research suggests that a chatbot’s agreeable nature can destabilize vulnerable users, reinforcing distorted thinking in what has been called a “technological folie à deux.” Other studies indicate that people experiencing suicidal ideation overwhelmingly prefer, and benefit from, human responses rather than polite machine buffers.

The Brenner Take: Closets Don’t Heal

Drawing on my own mental-health journey and my reporting in cybersecurity, what struck me most about Sophie’s story is this: She treated that AI as a closet for her deepest distress.

Closets don’t heal; they suffocate. In isolation, pain metastasizes into something unbearable.

Today’s AI isn’t designed to detect silences or respond to underlying cognitive distortions. It offers coherent answers — but lacks the judgment to challenge unhealthy thoughts or escalate a crisis.

I’ve previously described AI as a “speed upgrade” to human capability: It enhances efficiency and offers consistency. But in therapy, that speed is meaningless without presence. Emotional acuity, personal accountability and the willingness to act are what matter.

Someday, AI may discern distress more effectively. But today, it risks becoming an echo chamber, normalizing pain rather than illuminating it. That isn’t care. That’s abandonment.

How Widespread Is AI Therapy Becoming?

AI therapy isn’t a sci-fi future — it’s happening now, both by design and by default:

- Mainstream apps. Woebot Health reports around 1.5 million users since its 2017 launch, offering CBT-inspired support through employer or provider channels. Wysa serves around 1.3 million people across 30+ countries and has facilitated over 90 million conversations. Wysa also logged 150,000 conversations with 11,300 employees across 60 countries, a sign of real reach in the workplace.

- Crisis detection successes. Wysa reports that its AI detected 82% of SOS-level crises among users, with about 1 in 20 users reporting a crisis each year, although only a small fraction of them called helplines despite being encouraged to.

- User bond & engagement. Among 36,000 Woebot users, researchers found evidence of a therapeutic bond comparable to human-to-human connections, established much faster: within just days.

- Surge during the pandemic. A 2021 survey found that 22% of Americans had used a mental-health chatbot; of those, 44% relied exclusively on the chatbot, and many began during COVID-19. Users interacted about 2.3 times per week, and 88% said they were likely to use a chatbot again.

- Growing market size. The U.S. market for chatbot-based mental-health apps was estimated at $618 million in 2024 and is expected to grow more than 14% annually through 2033.

In short, AI mental-health tools are spreading rapidly into everyday life, especially where human care systems are stretched thin. They offer real potential, and real risk.

A Shared Path Forward

We both believe AI shouldn’t replace therapists; it should partner with them.

- AI as triage & support. Helping users articulate feelings, offering CBT exercises and prompting escalation when risk is detected.

- Learning from other fields. In radiology, AI spots anomalies; doctors review them. In cybersecurity, AI sifts data; analysts act. Therapy deserves no less.

- Establishing guardrails. Ethical frameworks, crisis escalation protocols, and strong privacy protections must be central to any deployment.

The Joint Take

This isn’t AI versus humans — it’s AI with humans, if designed wisely.

Sophie’s story is a stark reminder: Well-intentioned tools can harm without safeguards. AI therapy can expand access when human oversight is present, but used on its own, it risks deepening the very isolation it should relieve.

Whether someone is enduring suicidal despair or managing persistent stress, people deserve care that is safe, accountable and anchored in human empathy. When therapy is on the line, “move fast and break things” isn’t innovation — it’s malpractice.

AI mental-health tools must embody augmented care, not abandoned care: tools that shine light on pain rather than trap it in the closet. If we let machines hold secrets without checks, we invite voids no algorithm can fill.

This article was co-authored by Bill Brenner. Bill is SVP of Content Strategy at CyberRisk Alliance, a veteran cybersecurity journalist, and author of The OCD Diaries. He blends personal mental-health experience with industry insight, advocating for openness and support in cybersecurity. In this article, he and Alan explore the intersection of innovation and humanity, demanding that technology serve people, not replace them.