Large language models (LLMs) are on the rise, driven by the popularity of ChatGPT, and AI capabilities have increased significantly in recent years. Prompting techniques can improve the output of code generators, art generators and other generative AI tools. Although prompt engineering is a relatively new field, job postings for prompt engineers have grown in recent years. Several companies, including JPMorgan Chase & Co., Fidelity Investments and Bloomberg, seek engineers with prompt engineering skills.

Overview

- Generative AI creates new data samples based on trained models

- It can produce various kinds of content, such as text, images, audio and synthetic data

- ChatGPT by OpenAI is one of the most popular generative AI models

- Prompt engineering is essential when working with language models like GPT

- A prompt is an initial set of instructions or input that guides the model’s output in the desired direction

- Effective prompt engineering can steer the model’s output to make it more useful and reliable

- LangChain is an effective way to interface with LLMs programmatically

- Tips for writing effective prompts include thinking about your target audience, being clear and concise, providing detailed instructions, using visual aids and testing your prompts

- Effective prompt engineering increases the quality and efficiency of output generation, expands the range of applications, improves data analysis accuracy, and boosts productivity across various industries.

Generative AI

Generative AI is a type of machine learning that creates new data samples based on what a model learned during training. This subset of artificial intelligence can produce various kinds of content, such as text, images, audio and synthetic data. A generative AI model is a type of deep learning model that creates new content in response to input. Whereas traditional machine learning algorithms focus on understanding data and making accurate predictions, generative AI seeks to create new data samples similar to existing ones. OpenAI’s Generative Pre-trained Transformer (GPT) models are widely used in generative AI. Generally speaking, LLMs are statistical models of textual patterns: GPT-style models use the transformer architecture to predict the next word (strictly, the next token) in a text. When GPT sees the word “New,” it assigns a probability to each possible next word. Illustrative values might look like this:

- “New” car – 20%

- “New” York – 15%

- “New” Zealand – 10%

- “New” + “spaper” (Newspaper) – 8%

- “New” + “ly” (Newly) – 7%

If the next word is “York,” the probabilities for the word that follows “New York” might look like this:

- “New York” City – 50%

- “New York” Times – 20%

- “New York” Yankees – 10%

- “New York” State – 8%

- “New York” Knicks – 5%
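The two lists above can be reproduced as a small Python sketch. The percentages are the illustrative values from the text, not real GPT output, and the `NEXT_WORD` dictionary is a hypothetical stand-in for a model. It shows greedy decoding (always picking the most likely next word) and how conditional probabilities multiply along a sequence. Real models work over tokens and often sample from the distribution rather than always taking the top candidate.

```python
# Illustrative next-word distributions from the lists above (not real GPT output).
# Only the top candidates are listed; the remaining probability mass is spread
# over every other word in the vocabulary.
NEXT_WORD = {
    ("New",): {"car": 0.20, "York": 0.15, "Zealand": 0.10, "spaper": 0.08, "ly": 0.07},
    ("New", "York"): {"City": 0.50, "Times": 0.20, "Yankees": 0.10, "State": 0.08, "Knicks": 0.05},
}


def greedy_next(context: tuple) -> str:
    """Return the single most likely next word for a known context (greedy decoding)."""
    candidates = NEXT_WORD[context]
    return max(candidates, key=candidates.get)


def continuation_probability(words: list) -> float:
    """Probability of words[1:] given words[0]: P(w2 | w1) * P(w3 | w1, w2) * ..."""
    prob = 1.0
    for i in range(1, len(words)):
        context = tuple(words[:i])
        prob *= NEXT_WORD[context][words[i]]
    return prob


print(greedy_next(("New",)))         # car
print(greedy_next(("New", "York")))  # City
print(continuation_probability(["New", "York", "City"]))  # 0.15 * 0.50 = 0.075
```

Note that greedy decoding after “New” picks “car,” even though “New York City” is the most likely continuation once “York” has been chosen; this is one reason models often sample instead of always taking the top word.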

Common Definitions (Generated by ChatGPT)

Machine Learning: Machine learning is a subfield of artificial intelligence that focuses on developing algorithms and models that enable computers to learn and make predictions or decisions based on patterns in data. It involves training a model using a large dataset, allowing the model to identify and learn from patterns, and then using that trained model to make predictions or classify new, unseen data. Machine learning techniques are widely used in various domains, such as image and speech recognition, natural language processing, recommendation systems and predictive analytics.

Deep Learning: Deep learning is a subset of machine learning that focuses on training artificial neural networks with multiple layers to learn complex patterns and representations from data. It is inspired by the structure and function of the human brain and its interconnected neurons. Deep learning algorithms use hierarchical layers of artificial neurons (deep neural networks) to process and extract features from raw data. By iteratively adjusting the weights and biases of these neural networks, deep learning models can automatically learn hierarchical representations of data, allowing them to solve intricate problems such as image and speech recognition, natural language understanding, and autonomous decision-making. Deep learning has achieved remarkable breakthroughs in various domains and has been a driving force behind advancements in artificial intelligence.

Generative AI: Generative AI is a branch of artificial intelligence that focuses on creating systems or models capable of generating new and original content. These systems learn from existing data to create new examples that mimic the patterns and characteristics of the training data. Generative AI techniques are used to create realistic images, videos, music, text and even entire virtual environments. They rely on deep learning models, such as generative adversarial networks (GANs) and variational autoencoders (VAEs), to generate creative and novel outputs based on the patterns and distributions learned during training.

LLM (Large Language Model): LLM stands for Large Language Model, a type of artificial intelligence model designed to understand and generate human language. LLMs are trained on vast amounts of text data, enabling them to learn the statistical patterns and relationships in language. These models can perform various language-related tasks, including language translation, question-answering, text completion, summarization and conversation. LLMs, such as OpenAI’s GPT (Generative Pre-trained Transformer) models, have significantly advanced natural language processing and are widely used in applications that require language understanding and generation.

Both generative AI and large language models (LLMs) are types of artificial intelligence. The two are, however, different in some respects. Generative AI is a broad term that can refer to any AI system whose primary function is to generate content, while LLMs are a specific type of generative AI that work with language. When working with language models like GPT, prompt engineering is essential. A prompt is an initial set of instructions or input that guides the model’s output in the desired direction.

“Prompt engineering is the art of crafting effective input prompts to elicit the desired output from foundation models.” – Ben Lorica, Gradient Flow

Prompt engineering aims to elicit specific, desired responses from the language model. The prompt can influence the model’s behavior, tone, style or even the factual accuracy of its generated text. With this technique, the model’s output can be steered to make it more useful and reliable without retraining the model. If you have used ChatGPT, you already understand the basics of prompting.

A prompt can consist of multiple components:

- Instructions: typically tell the model how to use the inputs and any external information to produce the desired output.

- Context: additional context or external data that can guide the model.

- Query or input: typically entered directly by the user.

- Output indicator: the format or type of the output.

A prompt does not need all four elements; which ones to include, and in what format, depends on the task.
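A minimal Python sketch of how the four components might be combined into a single prompt string. The function name `build_prompt`, the section labels and their order are assumptions for illustration, not a required format; every component except the query can be left out.

```python
from typing import Optional


def build_prompt(
    query: str,
    instructions: Optional[str] = None,
    context: Optional[str] = None,
    output_indicator: Optional[str] = None,
) -> str:
    """Join whichever prompt components are present, in a fixed order (hypothetical layout)."""
    # Components that are None or empty strings are skipped entirely.
    parts = [
        ("Instructions", instructions),
        ("Context", context),
        ("Question", query),
        ("Answer format", output_indicator),
    ]
    return "\n\n".join(f"{label}: {text}" for label, text in parts if text)


# All four components.
print(build_prompt(
    query="Which company created ChatGPT?",
    instructions="Answer using only the context below.",
    context="ChatGPT is a chatbot released by OpenAI in November 2022.",
    output_indicator="A single company name.",
))

# Query only: the other three components are simply omitted.
print(build_prompt(query="What is a large language model?"))
```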

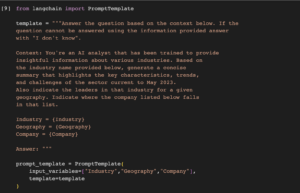

By using prompt engineering techniques, we can harness the power of language models like GPT-3 and GPT-4 and effectively steer their outputs to meet our specific needs, making them valuable tools for many natural language processing applications. LangChain, discussed in my previous article, is an effective way to interface with LLMs programmatically. With LangChain, you can construct a prompt in code and pass it to a model as input.

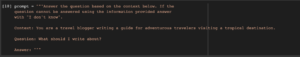

Tools like LangChain make it easy to turn prompts into templates. A prompt template parameterizes the prompt so that parts of the input can be variables. The following example uses a parameter/variable called “query” in place of the question, rather than writing the question directly into the prompt.
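The templating idea can be sketched with nothing but Python’s built-in `str.format`. `SimplePromptTemplate` is a hypothetical class written for this illustration, not LangChain’s API; it only mirrors the concept of declaring a `{query}` placeholder once and filling it in at run time.

```python
from string import Formatter


class SimplePromptTemplate:
    """Hypothetical stand-in for a prompt template (illustration only, not LangChain)."""

    def __init__(self, template: str):
        self.template = template
        # Collect named placeholders, e.g. {"query"} for "... {query} ...".
        # Formatter().parse yields (literal, field_name, format_spec, conversion).
        self.input_variables = {
            field for _, field, _, _ in Formatter().parse(template) if field
        }

    def format(self, **values: str) -> str:
        """Fill in the placeholders, failing clearly if any variable is missing."""
        missing = self.input_variables - set(values)
        if missing:
            raise ValueError(f"missing values for: {sorted(missing)}")
        return self.template.format(**values)


template = SimplePromptTemplate(
    "Answer the question based on the context below.\n\n"
    "Question: {query}\n\nAnswer:"
)

# The same template is reused; only the "query" variable changes.
for question in ["What is prompt engineering?", "What does GPT stand for?"]:
    print(template.format(query=question))
    print("---")
```

Raising a `ValueError` that names the missing variables gives a clearer message than the bare `KeyError` that `str.format` would otherwise raise.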

Well-written prompts can guide users, ensure consistency and improve the user experience. The following tips will help you write effective prompts for complex tasks:

- Think about your target audience before writing any prompts. How will your system be used? Who are your users, and what are their goals? Understanding your audience’s specific needs helps you create prompts tailored to them.

- Clear and concise prompts are essential for guiding users through complex tasks.

- Use plain language instead of technical jargon. Write simple sentences and avoid long paragraphs.

- Detail each step in the task, including any external tools or code snippets required.

- Ensure your instructions are accurate and include all the information the user needs to succeed.

- Test your prompts to make sure they are effective.

- Get feedback from others using the system after you run through the prompts yourself. Based on feedback, make necessary adjustments to improve the overall user experience.

Mark Hinkle, CEO of Peripety Labs, recently created a guide to what he calls “Superprompts” on his website, The Artificially Intelligent Enterprise. Here’s a great example of one of his Superprompts:

Getting accurate and effective results from generative models requires prompt engineering. Businesses and individuals in many industries benefit from it because it increases the quality and efficiency of output generation, expands the range of applications, improves data analysis accuracy and boosts productivity.

Ultimately, generative AI opens up a wide range of possibilities across many fields. Advanced prompt engineering techniques let us customize the outputs of language models efficiently and accurately, and they can be applied to a broad range of scenarios, from creative writing to synthetic data generation and beyond. With proper guidance, LLMs can be powerful tools for practical, real-world applications. We can look forward to what comes next as generative AI continues to evolve.