Amazon Web Services (AWS) today made available the Strands Agents SDK, a software development kit (SDK) for building artificial intelligence (AI) agents, as an open source project under the Apache License 2.0.

Additionally, AWS revealed that Accenture, Anthropic, Langfuse, mem0.ai, Meta, PwC, Ragas.io, and Tavily will all contribute to the Strands Agents SDK, including support for the Anthropic application programming interface (API) and, in the future, the agent-to-agent (A2A) communication framework being advanced by Google.

AWS has already been using the Strands Agents SDK to build its own agents and is now sharing that tool more broadly, says Neha Goswami, director of developer agents and experiences at AWS. Unlike other tools for building AI agents, however, the Strands Agents SDK makes it simple to invoke models to plan, chain thoughts, call tools and assess outcomes, she adds.

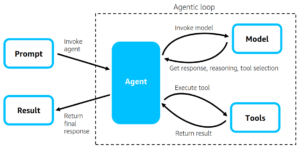

The AI agent then interacts with its model and tools in a loop until it completes the task assigned via the prompt. That enables a large language model (LLM) to plan out a series of steps, reflect on previous steps, and select one or more tools to complete the task. When the LLM selects a tool, the Strands Agents SDK takes care of executing the tool and providing the result back to the LLM. That enables an AI agent to, for example, use tools to retrieve relevant documents from a knowledge base, call APIs, run Python logic, or simply return a static string that contains additional model instructions.
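The loop described above can be sketched in plain Python. This is a minimal illustration of the general pattern, not the Strands Agents SDK itself: `scripted_model`, `search_docs`, `TOOLS`, and `run_agent` are hypothetical names, and the "model" is a stub that requests one tool call and then answers.

```python
from typing import Callable, Dict, List

# Hypothetical tool: a stand-in for a knowledge-base lookup.
def search_docs(query: str) -> str:
    return f"Top document for '{query}'"

# Hypothetical registry mapping tool names to plain Python functions.
TOOLS: Dict[str, Callable[[str], str]] = {"search_docs": search_docs}

def scripted_model(messages: List[dict]) -> dict:
    """Stand-in for an LLM call (assumption): asks for a tool once, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "search_docs",
                "input": messages[0]["content"]}
    return {"type": "final",
            "content": f"Answer based on: {tool_results[-1]['content']}"}

def run_agent(prompt: str,
              model: Callable[[List[dict]], dict] = scripted_model,
              tools: Dict[str, Callable[[str], str]] = TOOLS,
              max_steps: int = 5) -> str:
    """Call the model repeatedly until it returns a final answer."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "final":
            return reply["content"]
        # The model selected a tool: execute it and feed the result back.
        result = tools[reply["name"]](reply["input"])
        messages.append({"role": "tool", "name": reply["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("pricing"))
```

In a real agent the stub would be replaced by a call to an LLM, and the step limit guards against a model that never settles on a final answer.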

Developers only need to master some fundamental prompt engineering skills to point the Strands Agents SDK at the tools they want their AI agent to invoke, says Goswami. “You will need some basic prompt engineering knowledge,” she says.

AI agent builders can also customize how context is managed, choose where session state and memory are stored, and use various AI models to build multi-agent applications.

The Strands Agents SDK also includes a deployment toolkit with a set of reference implementations as well as an ability to collect agent trajectories and metrics, including distributed traces, from production agents based on open source OpenTelemetry (OTEL) software. Application developers can opt to deploy an AI agent as a monolith, where both the agentic loop and the tool execution run in the same environment, or as a set of microservices.

The Strands Agents SDK also provides more than 20 pre-built examples of integrations, including tools for manipulating files, making API requests, and interacting with AWS APIs. Applications can also use any Python function as a tool using the included @tool decorator.
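To illustrate how a decorator can turn an ordinary Python function into a tool, here is a rough, self-contained sketch. It is not the SDK's implementation or API: the `tool` decorator, `TOOL_REGISTRY`, and `word_count` below are hypothetical, and the sketch assumes that a tool is described to the model by its name, docstring, and parameter names.

```python
import inspect
from typing import Callable, Dict

# Hypothetical registry of tool metadata, keyed by function name.
TOOL_REGISTRY: Dict[str, dict] = {}

def tool(func: Callable) -> Callable:
    """Hypothetical decorator: record a function's name, docstring, and parameters."""
    TOOL_REGISTRY[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "parameters": list(inspect.signature(func).parameters),
        "callable": func,
    }
    # Return the function unchanged so it can still be called directly.
    return func

@tool
def word_count(text: str) -> int:
    """Count the words in a piece of text."""
    return len(text.split())

print(TOOL_REGISTRY["word_count"]["description"])
print(word_count("plan act observe"))
```

The docstring doubles as the description an agent would pass to the model, which is why a clear docstring matters for any function exposed this way.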

Finally, application developers can select from thousands of Model Context Protocol (MCP) servers to add more tools.

The overall goal is to provide a higher level of abstraction for building AI agents in a few days by eliminating the need to directly invoke specific framework libraries for setting up the scaffolding and orchestration, says Goswami.

It’s not clear how many AI agents are being built, but a Futurum Research report estimates they will drive up to $6 trillion in economic value by 2028. The challenge now is determining not just how to build them but also how to enable them to interoperate with each other to actually realize that goal.