Legitimate AI agents are valuable future users of applications, and most software organizations are not quite ready for them.

AI innovators are quickly building agentic AI systems that act on behalf of real customers: generating usage, making real purchases with real money, and integrating applications into AI-driven workflows. Meanwhile, many organizations still view AI agents through a “we have to block all non-human activity” lens.

AI agents are gaining new capabilities rapidly. Many AI agent developers never intended their agents to be glorified data parsers: they want them to take useful actions within real-world applications on behalf of their users.

If you want to be ready for that future, you need to figure out how to provide secure, scoped access to the “good” agents (those acting benevolently on behalf of real users) while keeping out bad agents, such as those looking to manipulate data.

From “Blocking all AI” to Thinking Deeply About Agent Experience

When generative AI tools first gained traction, some organizations blocked all known AI tools from accessing their web properties, which seemed like the safest option at the time.

Fast forward a few years, and blocking OpenAI, Anthropic, and BrowserBase is likely the last thing you want to do: legitimate, high-value users increasingly rely on LLMs to access your products and services.

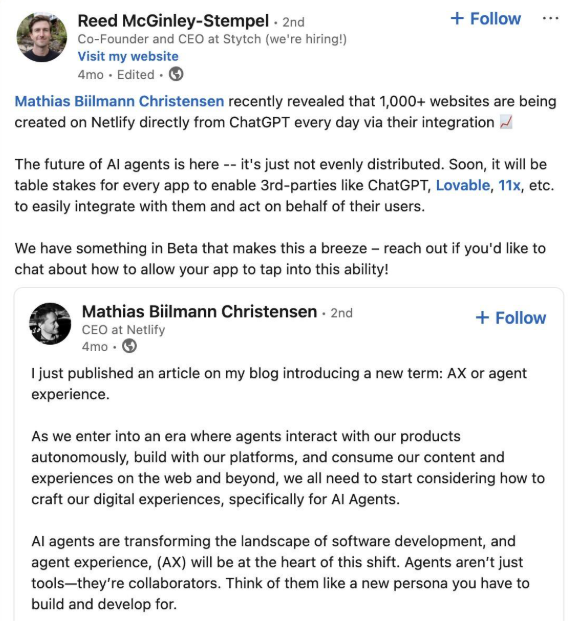

For example, Mathias Biilmann Christensen, CEO of Netlify, shared in a recent blog post that more than 1,000 websites are created on the Netlify platform every day directly through its ChatGPT integration.

Mixed in with the thousands of legitimate LLM interactions with products and services are some (perhaps many) less desirable uses. How do you prevent them? You almost certainly don’t want LLMs to stop interacting with your product, so removing LLM access entirely is out of the question. Ideally, you want to block only the “bad” actors and let the “good” ones in. And for your business and applications to benefit most from the good actors, you will want to make it easy for them to interact correctly with your systems by following agent experience (AX) principles.

Good actors won’t stay “good” on their own, though. It’s critically important for human users to have full control over what agents are and aren’t allowed to do with their identity, and they should be able to easily grant consent to agents or withdraw it as needed.
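To make that control concrete, consent can be modeled as a record of which permissions (scopes) a user has granted to each agent, which the user can extend or withdraw at any time. The sketch below is a minimal in-memory illustration under that assumption; the `ConsentRegistry` class, its methods, and the agent and scope names are hypothetical, and a production system would persist grants and revoke any tokens issued under withdrawn consent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Hypothetical in-memory store of which scopes each user has granted to each agent."""

    # Maps (user_id, agent_id) -> set of granted scope strings.
    _grants: dict[tuple[str, str], set[str]] = field(default_factory=dict)

    def grant(self, user_id: str, agent_id: str, scopes: set[str]) -> None:
        # Add scopes to any existing grant rather than replacing it.
        self._grants.setdefault((user_id, agent_id), set()).update(scopes)

    def revoke(self, user_id: str, agent_id: str, scopes: set[str] | None = None) -> None:
        # Withdraw specific scopes, or all consent for this agent when scopes is None.
        key = (user_id, agent_id)
        if scopes is None:
            self._grants.pop(key, None)
        elif key in self._grants:
            self._grants[key] -= scopes
            if not self._grants[key]:
                del self._grants[key]

    def is_allowed(self, user_id: str, agent_id: str, scope: str) -> bool:
        # Deny by default: an agent may act only within scopes the user granted.
        return scope in self._grants.get((user_id, agent_id), set())


registry = ConsentRegistry()
registry.grant("user-123", "example-agent", {"projects:read", "projects:write"})
registry.revoke("user-123", "example-agent", {"projects:write"})
print(registry.is_allowed("user-123", "example-agent", "projects:read"))   # True
print(registry.is_allowed("user-123", "example-agent", "projects:write"))  # False
```

Checking consent on every action, with deny as the default, means a withdrawal takes effect immediately rather than when a session happens to expire.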

AI Agent Fraud: A Real Consideration

Not all AI usage will be legitimate or good. AI is already accelerating many of the bad things happening on the internet, from spam to credential stuffing to cryptomining operations. While AX work will help you get the most benefit from AI agents using your applications, you also need to limit the risk posed by malicious AI actors.

AI agents have taken attempts to bypass traditional web restrictions to a new level: they use realistic browser fingerprints and human-like behavior to hide their origin. How are you supposed to tell them apart from real users so that you can limit, or at least monitor, unwanted behavior?

Because LLM technology offers so much customization at low cost and high speed, legacy bot detection methods (CAPTCHAs, IP blocking, user-agent filtering) are no longer effective against modern AI-driven automation. CAPTCHAs can be outsourced to solving networks. IP addresses change constantly. User-agent values are easy to spoof. AI-driven automation is sophisticated enough to generate plausible variations of all of the above and to use reliable proxy networks to break through standard protections that may still work against humans.

And bot traffic, however sophisticated, isn’t the only issue. Real users can, of course, deliberately use AI agents to exploit systems in more sophisticated ways, and faster than ever. Most companies today can’t really tell AI agents apart from human users in their applications and therefore can’t reliably block unwanted agent visits or actions. Nor can they always distinguish a well-intentioned user of an AI agent from one who is trying to use an agent to exploit the system or use it in unexpected ways.

If you offer a resource-intensive product (for example, if you are a cloud provider, a continuous integration provider, or a service that renders video assets), you are likely already feeling this impact and playing a constant cat-and-mouse game against AI-driven attackers trying to use expensive compute resources for free.

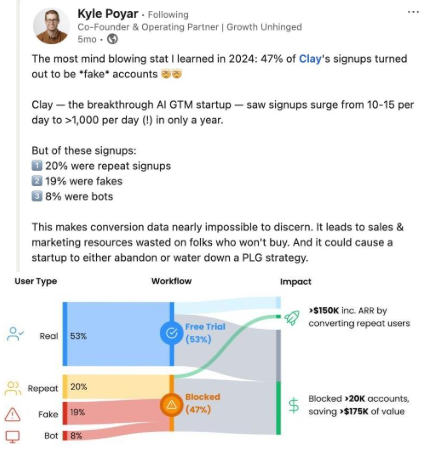

Alt: In one study, 47% of service signups turned out to be fake accounts.

Caption: Clay, one of the companies offering a resource-intensive product, had to block the creation of more than 20,000 accounts due to repeat signups, fake users, or bot activity.

Strong Authentication and Permissions Are Key for Good AX (and UX)

So how do you offer the best possible agent experience while keeping the risk of fraud to a minimum?

Our prediction for the next six to 12 months and beyond is this: engineering effort is no longer the blocker it once was for implementing agentic functionality, because the adoption of MCP (Model Context Protocol) has reduced it significantly. However, we will only collectively start seeing real value from the agentic behavior of AI tools once organizations trust them enough to let them take valuable actions on users’ behalf. And engineering teams are right to be very cautious about granting such access, because at AI scale, any malicious action can have a much greater impact than it otherwise would.

Trusted AI agents currently have well-known origins, and as we wrote previously, you may want to use that information to start identifying and monitoring the known good actors. But origin information isn’t granular or stable enough to fully trust agentic actions.
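As a sketch of that first step, the function below checks a request’s source IP against published address ranges for known agents, using only Python’s standard `ipaddress` module. The agent names and ranges are hypothetical placeholders (the ranges are reserved documentation networks); real agent operators publish their own lists, which change over time. Consistent with the caveat above, a match is a signal for monitoring, not proof of whom the agent is acting for or what it should be allowed to do.

```python
from __future__ import annotations

import ipaddress

# Hypothetical allowlist. Real agent operators publish their own ranges;
# these are reserved documentation networks (RFC 5737) used as placeholders.
KNOWN_AGENT_RANGES: dict[str, list[str]] = {
    "example-agent-a": ["192.0.2.0/24"],
    "example-agent-b": ["198.51.100.0/25"],
}

# Parse the CIDR strings once at import time.
_NETWORKS = {
    name: [ipaddress.ip_network(cidr) for cidr in cidrs]
    for name, cidrs in KNOWN_AGENT_RANGES.items()
}


def identify_known_agent(remote_ip: str) -> str | None:
    """Return the known agent whose published range contains remote_ip, or None."""
    addr = ipaddress.ip_address(remote_ip)
    for name, networks in _NETWORKS.items():
        # Membership is simply False when the IP versions differ (IPv4 vs. IPv6).
        if any(addr in net for net in networks):
            return name
    return None


print(identify_known_agent("192.0.2.17"))   # example-agent-a
print(identify_known_agent("203.0.113.5"))  # None
```

The result is best used to label and monitor traffic; it says nothing about which user the agent represents, which is the gap strong authentication fills.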

We believe organizations will only open the truly valuable parts of their applications to agents once they see that mature, tested methods for securing user access (such as OAuth) can also provide strong authentication and enforced permissions for agents. From the application developer’s perspective, that means your app needs to be an OAuth 2.0 and OpenID Connect-compliant identity provider.
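One concrete requirement of acting as an OAuth 2.0 identity provider for agents is supporting the authorization code flow with Proof Key for Code Exchange (PKCE, RFC 7636), since agents often run as public clients (such as desktop or CLI tools) that cannot keep a client secret. The sketch below shows the S256 method: the client derives a `code_challenge` from a random `code_verifier`, and the identity provider later checks that the verifier presented at the token endpoint matches. The function names are hypothetical; only the transformation itself follows the RFC.

```python
import base64
import hashlib
import hmac
import secrets


def make_code_verifier() -> str:
    # 32 random bytes -> 43-character base64url string (within RFC 7636's 43-128 range).
    return base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")


def s256_challenge(verifier: str) -> str:
    # code_challenge = BASE64URL(SHA256(ASCII(code_verifier))), without padding.
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def verify_pkce(stored_challenge: str, presented_verifier: str) -> bool:
    # At the token endpoint, the identity provider recomputes the challenge from the
    # verifier the client presents and compares it in constant time.
    return hmac.compare_digest(stored_challenge, s256_challenge(presented_verifier))


# Client side: create a verifier and send only the challenge with the authorization request.
verifier = make_code_verifier()
challenge = s256_challenge(verifier)

# Server side: when the client redeems the authorization code, check the verifier.
print(verify_pkce(challenge, verifier))  # True
```

Using the example verifier from RFC 7636 Appendix B, `s256_challenge("dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk")` returns `"E9Melhoa2OwvFrEMTJguCHaoeK1t8URWbuGJSstw-cM"`, the challenge listed in that appendix.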

To Facilitate a Stellar AI Agent Experience, Your App Needs to Be an Identity Provider

Earlier this year, we showed several examples of companies like Supabase building OAuth 2.0 and OpenID Connect functionality to facilitate agent access.

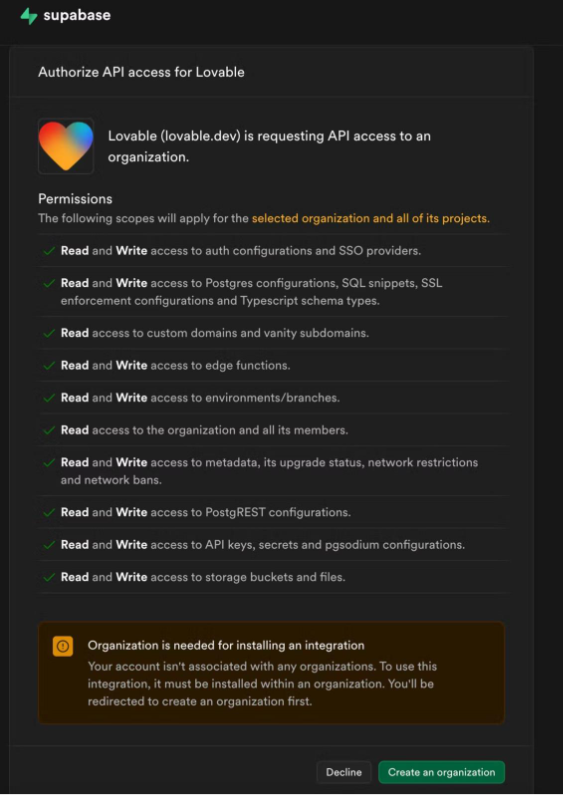

Alt/Caption: An example of a custom permission dialog with granular permissions from Supabase.

In this example, Supabase, a leading open-source Firebase alternative and a much-loved datastore provider, gives the AI-powered development platform Lovable very granular access to specific actions on the Supabase platform.

Because Supabase acts as its own identity provider, the integration can offer something much more powerful than a standard “Allow or disallow access” flow: developers can build powerful integrations on top of the Supabase platform while end users see exactly which actions an agentic integration (Lovable, in this case) may take on their behalf.
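Behind a granular consent dialog like this, each API action has to check the specific scope the user approved rather than a single all-or-nothing grant. The sketch below assumes scopes arrive as the space-delimited `scope` value of an OAuth 2.0 access token; the action names, scope names, `InsufficientScope` exception, and `authorize` helper are hypothetical and do not reflect Supabase’s actual scopes.

```python
# Hypothetical mapping from platform actions to the OAuth scope each one requires.
# These names are illustrative, not any real provider's scope list.
REQUIRED_SCOPE = {
    "list_tables": "database:read",
    "apply_migration": "database:write",
    "deploy_function": "functions:write",
}


class InsufficientScope(Exception):
    """Raised when a token lacks the scope an action requires."""


def authorize(action: str, token_scopes: str) -> None:
    # OAuth 2.0 expresses scopes as a space-delimited string (RFC 6749, section 3.3).
    granted = set(token_scopes.split())
    required = REQUIRED_SCOPE.get(action)
    if required is None:
        # Deny actions with no scope mapping instead of allowing them by default.
        raise InsufficientScope(f"unknown action: {action}")
    if required not in granted:
        raise InsufficientScope(f"{action} requires {required}")


authorize("list_tables", "database:read")  # permitted: the scope was granted
try:
    authorize("apply_migration", "database:read")
except InsufficientScope as err:
    print(err)  # apply_migration requires database:write
```

Mapping every action to an explicit scope, and rejecting unmapped actions, keeps what the user saw in the consent dialog and what the API enforces in sync.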

Downstream, Lovable can use this access not only to suggest database changes but to actually write them, with the user’s approval. Such a deep integration sits so close to the core of the Supabase and Lovable products that it would not be possible without an identity model closely aligned with how the two applications work together.
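The “suggest, then write with the user’s approval” pattern can be sketched as a queue in which an agent’s proposed change stays inert until the user approves it from their own session. This is a hypothetical illustration of the pattern, not how Lovable or Supabase implement it; the class names are invented, and the `applied` list stands in for executing the change against a real database.

```python
import uuid
from dataclasses import dataclass


@dataclass
class PendingChange:
    change_id: str
    agent_id: str
    sql: str
    approved: bool = False


class ApprovalQueue:
    """Hypothetical gate: agents may propose writes, but only user-approved ones are applied."""

    def __init__(self) -> None:
        self._pending: dict[str, PendingChange] = {}
        self.applied: list[str] = []  # stands in for executing against a real database

    def propose(self, agent_id: str, sql: str) -> str:
        # The agent's credentials are enough to propose a change, never to apply it.
        change = PendingChange(change_id=str(uuid.uuid4()), agent_id=agent_id, sql=sql)
        self._pending[change.change_id] = change
        return change.change_id

    def approve(self, change_id: str) -> None:
        # Intended to be called from the user's own session after reviewing the change.
        self._pending[change_id].approved = True

    def apply(self, change_id: str) -> None:
        change = self._pending[change_id]
        if not change.approved:
            raise PermissionError("change has not been approved by the user")
        self.applied.append(change.sql)
        del self._pending[change_id]


queue = ApprovalQueue()
cid = queue.propose("example-agent", "ALTER TABLE todos ADD COLUMN done boolean;")
queue.approve(cid)  # the user reviews and approves the suggestion
queue.apply(cid)
print(queue.applied)
```

Separating who may propose from who may approve is what lets the agent do real work without ever holding unilateral write access.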

From what we’ve seen, the “wow” experiences in agentic AI happen when a carefully designed and implemented agent permissions model protects users’ consent in the background. And those are the experiences that tend to stick with end users.

Of note: the first deep integrations, such as the Lovable example above, were proofs of concept for agent use, and they required significant engineering effort to support bespoke, single-agent use cases. With the adoption of MCP, however, the effort needed to implement such functionality has decreased, and many applications and identity platforms are releasing features that make securing MCP access straightforward.
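As one example of what securing MCP access involves: at the time of writing, the MCP authorization specification builds on OAuth and asks MCP servers to publish OAuth 2.0 Protected Resource Metadata (RFC 9728), so that a client receiving a `401` response can discover which authorization server to use. The framework-agnostic sketch below builds that metadata document and the `WWW-Authenticate` header; the URLs and scope names are hypothetical, and you should check the current specification before relying on these details.

```python
from __future__ import annotations

import json

# Hypothetical deployment URLs; replace them with your own MCP server and identity provider.
MCP_SERVER_URL = "https://mcp.example.com"
AUTH_SERVER_URL = "https://auth.example.com"
METADATA_URL = f"{MCP_SERVER_URL}/.well-known/oauth-protected-resource"


def protected_resource_metadata() -> str:
    # JSON document served at METADATA_URL (field names defined in RFC 9728).
    return json.dumps({
        "resource": MCP_SERVER_URL,
        "authorization_servers": [AUTH_SERVER_URL],
        "scopes_supported": ["projects:read", "projects:write"],  # illustrative scopes
        "bearer_methods_supported": ["header"],
    })


def unauthorized_response() -> tuple[int, dict[str, str]]:
    # Status and headers for a request that arrives without a valid access token.
    # The resource_metadata parameter points the MCP client at the metadata document.
    return 401, {"WWW-Authenticate": f'Bearer resource_metadata="{METADATA_URL}"'}


status, headers = unauthorized_response()
print(status, headers["WWW-Authenticate"])
```

From there, the client fetches the metadata, follows `authorization_servers` to your identity provider, and runs a standard OAuth flow, so the consent and scope model you already built applies to MCP clients as well.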

In the Long Run: AI Agent Authentication and Permissions

In the long run, enterprises need to invest in AI agent authentication and a solid permission model if they want to get value from AI agents using their applications on behalf of real users.

Organizations will increasingly need to let agents authenticate themselves, and to provide both organization-level permissions that admins control and consent workflows and UI through which users grant agents permission for the exact actions they may take.
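One way to combine these two layers is to grant an agent only the intersection of what an admin allows for that agent, what the user consented to, and what the agent requested. The sketch below assumes that model; the policy table, agent ID, and scope names are hypothetical.

```python
from __future__ import annotations

# Hypothetical admin policy: the scopes each agent may ever receive in this organization.
ORG_AGENT_POLICY: dict[str, set[str]] = {
    "example-agent": {"projects:read", "projects:write"},
}


def effective_scopes(agent_id: str, user_granted: set[str], requested: set[str]) -> set[str]:
    # Unknown agents get nothing; otherwise intersect admin policy, user consent, and request.
    org_allowed = ORG_AGENT_POLICY.get(agent_id, set())
    return org_allowed & user_granted & requested


print(sorted(effective_scopes(
    "example-agent",
    user_granted={"projects:read", "billing:read"},
    requested={"projects:read", "projects:write", "billing:read"},
)))
# ['projects:read']
```

Here `projects:write` is dropped because the user never consented to it, and `billing:read` is dropped because the admin policy does not allow it for this agent, so neither layer can be bypassed by the other.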

Given this direction, our recommendation to all software organizations is to seriously consider investing in agent authentication infrastructure, and ultimately to become their own identity provider for both AI and non-AI users.

In addition to better authentication and authorization, we recommend planning to invest in detecting, managing, and mitigating unauthorized agent activity.
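A simple starting point for detection is to track activity per authenticated agent identity (for example, an OAuth client ID) rather than per IP address, which, as noted earlier, changes constantly. The sketch below flags an identity that exceeds a threshold of events within a sliding time window; the class name and thresholds are hypothetical, and a real system would combine many such signals before acting.

```python
from __future__ import annotations

import time
from collections import defaultdict, deque


class SlidingWindowMonitor:
    """Hypothetical detector: flags an identity with more than max_events in window_s seconds."""

    def __init__(self, max_events: int, window_s: float) -> None:
        self.max_events = max_events
        self.window_s = window_s
        self._events: dict[str, deque[float]] = defaultdict(deque)

    def record(self, identity: str, now: float | None = None) -> bool:
        """Record one event and return True if the identity is now over the limit."""
        t = time.monotonic() if now is None else now
        events = self._events[identity]
        events.append(t)
        # Drop timestamps that have fallen out of the window.
        while events and events[0] <= t - self.window_s:
            events.popleft()
        return len(events) > self.max_events


monitor = SlidingWindowMonitor(max_events=3, window_s=60)
for t in (0, 1, 2, 3):
    flagged = monitor.record("client-abc", now=t)
print(flagged)  # True: four events within 60 seconds
```

Because the key is an authenticated identity, a flag can feed directly into mitigation, such as pausing that client’s tokens or asking the user to re-confirm consent, instead of blocking whole IP ranges.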