As the global transition to renewable energy accelerates, solar power is rapidly becoming a foundational element of modern energy infrastructure. However, managing large-scale photovoltaic (PV) systems remains a significant challenge, particularly due to the complexity and volume of sensor data outpacing traditional human-centered diagnostics approaches.

In most solar operations today, operators rely on static dashboards, rules-based alarms and periodic maintenance checks to detect underperformance or failures. This reactive model is not only inefficient but also vulnerable to late-stage fault discovery, energy loss and costly downtime.

This article proposes a future-ready architecture for zero-touch solar diagnostics — a fully autonomous, artificial intelligence (AI)-driven pipeline that leverages Databricks, the OSIsoft PI System and AWS SageMaker. The goal is to transform solar diagnostics from a manual, labor-intensive task into a self-governing, intelligent process that can scale seamlessly with fleet size and system complexity.

The Challenge: Too Much Data, Too Little Intelligence

Modern solar farms are equipped with thousands of sensors measuring parameters such as irradiance, string voltage, module temperature and inverter frequency. These sensors generate vast telemetry, often gigabytes per site per day, stored in historical databases such as the OSIsoft PI System.

Despite the abundance of data, current diagnostics workflows typically suffer from:

- Delayed detection of anomalies

- High false-positive alarm rates

- Manual root-cause analysis

- Inconsistent reporting across sites

- Underutilization of historical data trends

Operators are often overwhelmed by alerts or forced to make judgment calls based on fragmented data. The cost? Missed faults, reduced performance ratio (PR) and higher O&M overhead.

The Vision: Zero-Touch Diagnostics

Zero-touch diagnostics refers to a system that can autonomously detect faults, explain them, document them and notify stakeholders, all without human intervention. Such a pipeline should be able to:

- Stream sensor data in near real-time

- Engineer meaningful features from raw values

- Apply AI models to classify fault types and severities

- Generate human-readable root cause analysis (RCA) reports

- Trigger alerts and logging automatically

- Adapt its behavior based on performance feedback

By uniting modern data platforms and machine learning tools, this vision becomes technically achievable and operationally valuable.

Step 1: Ingesting and Engineering Data With PI and Databricks

The architecture begins with continuous data streaming from the OSIsoft PI System to Databricks Delta Lakes. A Databricks Spark pipeline extracts and processes historical and real-time data across the fleet.

Key engineered features include:

- Voltage Skew: Measures imbalance among strings under similar irradiance

- Thermal Ramp Rate: Detects abnormal heating patterns in inverters or modules

- Clipping Residuals: Identify power capping due to system design or inverter limitations

- Reactive Power Oscillations: Capture instability in output power during grid fluctuations

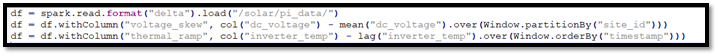

Example Spark Transformation:

This step ensures that the system analyzes diagnostically relevant metrics, not just raw values.

Step 2: Fault Detection and Explanation With SageMaker

After feature extraction, the dataset is passed into a modeling layer built using AWS SageMaker. The proposed architecture supports:

- CatBoost Classifiers: Used for fault type prediction (e.g., PID, inverter self-trip, shading anomaly)

- Huber Regressors: Estimate severity levels or expected energy loss

- SHAP Integration: Offers transparent interpretability by showing which features influenced each prediction

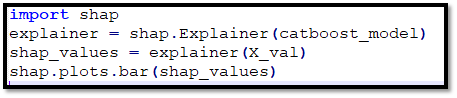

Example SHAP-based explainability snippet:

This transparency is crucial in high-stakes energy environments where model trust is non-negotiable.

Step 3: Autonomous RCA Generation and Alerting

One of the most innovative components of the zero-touch pipeline is the automated RCA engine.

For every detected anomaly, the system can auto-generate a PDF or dashboard artifact including:

- Timestamp and location of the fault

- SHAP-based feature explanation

- Historical comparison with similar events

- Estimated energy impact

- Suggested next steps (e.g., clean module, replace string, adjust inverter)

Alerts can then be dispatched via Slack, Microsoft Teams or AWS SNS, while all events are logged securely using Azure Table Storage or DynamoDB.

Step 4: Continuous Learning & Feedback Loop

Unlike traditional static models, the proposed system supports adaptive learning.

Key components are:

- Drift detection algorithms monitor performance metrics

- Confirmed fault feedback (if provided) is ingested as labeled training data

- Models are retrained periodically using SageMaker Pipelines + MLflow

- Model versions and metrics are tracked for reproducibility and rollback

This closed-loop intelligence ensures that the system becomes more precise over time and adapts to new equipment behavior, environmental patterns or solar technology upgrades.

Step 5: Security, Governance and Compliance

Given the critical nature of solar power infrastructure, security and governance are embedded into the pipeline:

- Role-Based Access Control (RBAC) with Azure AD or AWS IAM

- All model predictions and alerts are timestamped and audit-logged

- Encryption at rest and in transit for all telemetry and reports

- System supports compliance with NERC CIP, SOC 2 and ISO 27001 frameworks

The result is not just intelligence it’s secure intelligence.

Potential Benefits (Upon Deployment)

While the full system is conceptual at this stage, simulated testing and architectural modeling suggest substantial benefits upon implementation such as:

- Up to 80% reduction in manual fault triaging

- Faster detection cutting anomaly response time from hours to minutes

- Near elimination of false-positive alarms

- Generation of standardized RCA reports for regulatory audits

- Seamless scaling across hundreds of geographically dispersed solar sites

These gains address both cost efficiency and operational reliability, which are core drivers in the renewable energy sector.

Future Directions

Next-generation enhancements may include:

- Multi-Agent Reinforcement Learning (MARL) for site-level optimization

- Integration with CMMS systems for automated work order creation

- Weather-aware model tuning to adjust thresholds dynamically

- Digital twin simulation for pre-validating model interventions

As models become smarter and cloud-native infrastructure matures, zero-touch diagnostics may evolve into zero-intervention solar autonomy.

Conclusion

In a solar-powered future, intelligence isn’t a bonus; it’s an imperative. With solar fleets expanding in size, diversity and criticality, diagnostics must keep pace. A zero-touch, AI-powered pipeline is not just a conceptual leap; it’s an operational necessity.

By architecting an explainable, adaptive, secure and fully autonomous diagnostics pipeline, we pave the way for solar systems that don’t just generate energy — they protect, heal and optimize themselves.

The sun may power the grid. But AI will keep it running.