Anthropic has just dropped Claude Opus 4 and Claude Sonnet 4, featuring impressive code generation capabilities. If you’re a developer building an artificial intelligence (AI) solution, or you’ve already built one, should you switch to these latest models?

That’s the question almost every developer is grappling with in this fast-moving AI landscape. The answer, of course, depends on your specific workload. For retrieval-augmented generation (RAG)-based chatbots, knowledge assistants or AI agents, whether a model upgrade improves performance depends largely on the quality of your content and on how accurately user questions are represented.

Overwhelmed By Too Many LLM Choices?

How do you pick the best large language model (LLM) for your use case? What about the embedding model for the content? Does the chunk size of your content matter? What about model temperature? Does it matter which cloud you use, or whether you run on-premises? Each decision impacts performance, yet testing every combination would take forever.

A typical RAG-based solution can have over 100,000 configuration permutations, each with a different cost versus quality profile. This is where most teams flounder.
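To see how quickly the number of permutations grows, here is a minimal sketch that counts the configurations in a made-up search space. Every option name and value below is a hypothetical placeholder chosen for illustration, not a recommendation or a figure from the paper.

```python
from math import prod

# Hypothetical search space: names and values are illustrative assumptions.
search_space = {
    "llm": [f"llm-{i}" for i in range(8)],
    "embedding_model": [f"embed-{i}" for i in range(6)],
    "chunk_size": [128, 256, 512, 768, 1024, 2048],
    "chunk_overlap": [0, 32, 64, 128],
    "top_k": [1, 3, 5, 10, 20],
    "temperature": [0.0, 0.2, 0.5, 0.7, 1.0],
    "reranker": [False, True],
    "prompt_template": ["concise", "detailed", "cited", "step_by_step"],
}


def count_configurations(space):
    """Multiply the number of options per setting to get the total permutations."""
    return prod(len(options) for options in space.values())


total = count_configurations(search_space)
print(total)  # 230400
```

Eight fairly ordinary settings with a handful of options each already exceed 200,000 combinations, which is why exhaustive testing is rarely practical.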

Recent research tackles this optimization problem with considerably less time and fewer evaluation resources. The paper, ‘Faster, Cheaper, Better: Multi-Objective Hyperparameter Optimization for LLM and RAG Systems’, describes a Bayesian optimization technique that searches for the best balance among objectives such as safety, alignment, cost, carbon and latency. Because the technique does not need to evaluate every combination, developers can work with a smaller sample set and rapidly identify a near-optimal configuration.

Where Is the Pareto Frontier and Can I Book an Expedition There?

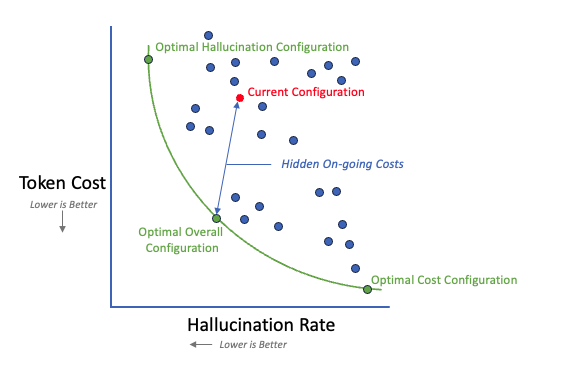

For those with mathematics skills, the paper goes into detail on how this balancing act works so quickly. For example, let’s say you want to minimize both hallucination rates and token costs. These two goals usually pull in opposite directions.

Look at the accompanying graph. The Y-axis displays the cost and the X-axis shows the hallucination rate. The Pareto Frontier is an L-shaped curve; every possible configuration is a point either on the curve or above and to the right of it. Each point on the curve is a trade-off that cannot be improved on one axis without getting worse on the other. If you weight both goals equally, the optimal configuration sits at the point on the curve closest to the origin (which theoretically represents zero hallucinations and zero cost).
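The idea of a frontier can be made concrete with a few lines of code. The sketch below uses a brute-force dominance check on five invented configurations; the names and numbers are assumptions for illustration, and this is not the Bayesian method the paper describes, only a way to show which points end up on the curve.

```python
from typing import NamedTuple


class Config(NamedTuple):
    name: str
    hallucination_rate: float  # lower is better
    cost: float                # lower is better


def dominates(a, b):
    """True if a is at least as good as b on both axes and strictly better on one."""
    no_worse = a.hallucination_rate <= b.hallucination_rate and a.cost <= b.cost
    better = a.hallucination_rate < b.hallucination_rate or a.cost < b.cost
    return no_worse and better


def pareto_frontier(configs):
    """Keep only the configurations that no other configuration dominates."""
    return [c for c in configs if not any(dominates(o, c) for o in configs)]


# Hypothetical measurements (hallucination rate, cost per 1,000 queries).
configs = [
    Config("A", 0.02, 9.0),
    Config("B", 0.05, 4.0),
    Config("C", 0.12, 1.5),
    Config("D", 0.08, 6.0),  # worse than B on both axes
    Config("E", 0.15, 3.0),  # worse than C on both axes
]

frontier = pareto_frontier(configs)
print([c.name for c in frontier])  # ['A', 'B', 'C']
```

Configurations D and E sit above and to the right of the curve: another option beats each of them on both hallucination rate and cost, so there is no reason to choose them.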

Now imagine optimizing for more than two dimensions (such as faithfulness, tone, toxicity, cost and latency), with some level of weighted importance that you’ve assigned to each.

Your HR bot might have to meet specific compliance requirements, where data leakage is not an option. Meanwhile, your product assistant might focus on reducing cost while maintaining answer relevancy.
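One simple way to express such priorities is a weighted score across metrics. The sketch below is a plain weighted sum, offered as an illustrative stand-in for the paper's multi-objective method; the metric values, weights and configuration names are all assumptions, and each metric is taken to be normalized to the range 0 to 1, where lower is better.

```python
# Hypothetical, pre-normalized metrics (0 = best, 1 = worst) per configuration.
candidates = {
    "config_a": {"unfaithfulness": 0.05, "toxicity": 0.02, "cost": 0.80, "latency": 0.60},
    "config_b": {"unfaithfulness": 0.25, "toxicity": 0.05, "cost": 0.30, "latency": 0.30},
    "config_c": {"unfaithfulness": 0.40, "toxicity": 0.10, "cost": 0.10, "latency": 0.20},
}


def weighted_score(metrics, weights):
    """Weighted average of the metrics; a lower score is better."""
    total_weight = sum(weights.values())
    return sum(weights[k] * metrics[k] for k in weights) / total_weight


def best_config(candidates, weights):
    """Return the name of the configuration with the lowest weighted score."""
    return min(candidates, key=lambda name: weighted_score(candidates[name], weights))


# Assumed priorities: the HR bot weights safety heavily, the product assistant weights cost.
hr_bot_weights = {"unfaithfulness": 5, "toxicity": 5, "cost": 1, "latency": 1}
product_assistant_weights = {"unfaithfulness": 2, "toxicity": 1, "cost": 5, "latency": 2}

print(best_config(candidates, hr_bot_weights))             # config_a
print(best_config(candidates, product_assistant_weights))  # config_c
```

The same three candidates produce different winners once the weights change, which is why a single "best model" rarely exists across workloads.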

How Are You Choosing Today?

Most development teams stick with whichever model they tried first, or test a few models periodically. This means you are likely sitting at a more or less random point above the Pareto Frontier curve in the graph, without knowing how close or far you are from the optimal point. That gap shows up as avoidable, recurring token costs and response quality issues. These hidden costs reduce the ROI of your AI solution and increase your risk profile.

Even if you’re close to optimal today, the fast pace of model improvements and changing query trends can gradually shift you away from that point. Your perfect setup today becomes outdated tomorrow. Re-evaluating new configurations and workload shifts becomes part of your regular continuous improvement process.

Smart optimization at build time, combined with ongoing monitoring, keeps your AI solutions performing where they should throughout their lifetime.

Related Research

This work builds on leading research in AI security, focusing on response safety, alignment with industry and corporate policies, and deeper evaluation of cost and carbon footprint metrics beyond simple rule-based checks or LLM-as-a-judge approaches. These advanced metrics allow priorities to be fine-tuned and help tailor and personalize responses for different user demographics.

Another interesting area is the relationship between these advanced metrics and risk severity, where severity can have a dynamic cost potential. If this relationship can be reliably identified, then real-time decisions can stop high-risk responses before they occur.

The Bottom Line

As the AI model space continues to advance, verifying trust and security becomes increasingly important. Traditional cybersecurity tends to focus on ‘outside in’ threats, while AI systems carry a different kind of risk: They generate their own. Balancing optimization with security is the responsibility of AI solution developers, compliance officers and chief information security officers (CISOs) in the organization.