When people talk about conversational artificial intelligence (AI), the spotlight often lands on convenience. We think first of scheduling, voice-based home assistants and, more recently, AI customer support. But beneath these high-visibility use cases lies a deeper, transformative potential.

For individuals who have difficulty hearing, everyday communication presents numerous barriers, from missing out on in-person conversations to struggling with poor audio quality in virtual meetings. Hearing aids and closed-captioning systems have long been part of the solution, but today’s AI technologies are unlocking a new level of intelligent support.

Driven by breakthroughs in natural language processing (NLP), edge computing and transformer-based speech models, conversational AI is enabling hearing-impaired users not only to understand spoken language more accurately in real time, but also to interact with the world more confidently.

Let’s investigate the technical innovations powering this shift, the current challenges, and the future of inclusive voice-first experiences.

Understanding the Challenges Faced by Hearing-Impaired Users

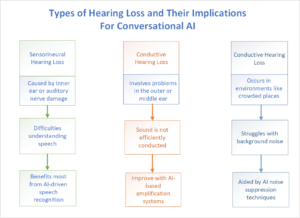

Hearing loss is rarely a single, uniform condition. It spans a spectrum of types: sensorineural hearing loss, which affects the inner ear or auditory nerve; conductive hearing loss, where sound can’t travel efficiently through the outer or middle ear; and situational hearing challenges, such as noisy environments that even people with normal hearing struggle with.

Traditional hearing aids amplify sounds uniformly. But amplification without intelligent processing can result in overwhelming background noise or unclear speech — particularly in dynamic acoustic environments like busy streets or echo-filled rooms.

Another barrier is real-time communication. Even when video calls include closed captions, those captions are often delayed, inaccurate or generic, lacking the nuance or context needed for meaningful interaction. This is where conversational AI can fill the gap.

How Conversational AI Enhances Accessibility

Conversational AI tools today are closing the accessibility gap in transformative ways. Real-time transcription lets users read spoken language with minimal delay. These systems leverage advanced transformer models to enhance the accuracy and context-awareness of transcription.

Contextual captioning goes a step further. Unlike generic subtitles, AI can interpret intent and adjust dynamically for regional accents, slang and rapid speech patterns. This leads to a more natural and intuitive communication experience.

In addition, modern hearing aids now integrate directly with AI systems via Bluetooth. This integration allows them to access advanced features such as scene detection and adaptive directional microphones. In some devices, onboard AI chips process environmental audio in real time, tailoring sound amplification and filtering to match the user’s context.

Technical Innovations Driving This Shift

Several innovations make these capabilities possible. Transformer-based speech models like Whisper, Wav2Vec 2.0 and Conformer offer high transcription accuracy by analyzing speech in context and accounting for long-range dependencies.
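Transcription accuracy for models like these is commonly reported as word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the system's output into the reference transcript, divided by the number of reference words. The following is a minimal sketch of that metric in plain Python. The function name and the simple lowercase-and-split normalization are assumptions made for illustration; published benchmarks usually apply more careful text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One dropped word out of six reference words.
print(round(word_error_rate("turn left at the next street",
                            "turn left at next street"), 3))  # 0.167
```

A lower WER means fewer caption errors for the reader, although the metric weights every word equally and so does not capture whether the missed word was the one that carried the meaning.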

Edge AI is another critical development. It allows for low-latency processing directly on the device, minimizing privacy concerns and improving response times. This is especially important for wearables and hearing aids with limited computational resources.

Deep learning also improves beamforming and noise suppression techniques. These allow AI systems to isolate the speaker’s voice from background noise, dramatically improving clarity and recognition. Finally, multimodal interaction combining voice, text and visuals supports users who benefit from more than one mode of communication. This is essential in making the technology universally accessible.
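To make the beamforming idea concrete, here is a rough sketch of delay-and-sum, the classical, non-learned form of the technique. It is not the deep-learning approach used in any particular product. It only illustrates the underlying principle: when the channels of a microphone array are time-aligned on the speaker and averaged, the speech adds up coherently while independent noise partly cancels. The two-microphone scene, the noise level and the two-sample lag are all hypothetical values chosen for the demonstration.

```python
import math
import random


def delay_and_sum(channels, delays):
    """Advance each channel by its arrival delay (in samples) and average them."""
    if len(channels) != len(delays):
        raise ValueError("need exactly one delay per channel")
    if any(d < 0 for d in delays):
        raise ValueError("delays must be non-negative sample counts")
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[n + d] for ch, d in zip(channels, delays)) / len(channels)
        for n in range(length)
    ]


def rms_error(signal, target):
    """Root-mean-square difference over the overlapping samples."""
    n = min(len(signal), len(target))
    return math.sqrt(sum((signal[i] - target[i]) ** 2 for i in range(n)) / n)


# Hypothetical scene: a tone reaches mic 1 two samples later than mic 0,
# and each microphone adds its own independent noise.
rng = random.Random(0)
num_samples, lag = 2000, 2
speech = [math.sin(2 * math.pi * n / 16) for n in range(num_samples)]
mic0 = [s + rng.gauss(0, 0.5) for s in speech]
mic1 = ([rng.gauss(0, 0.5) for _ in range(lag)]
        + [s + rng.gauss(0, 0.5) for s in speech])

enhanced = delay_and_sum([mic0, mic1], [0, lag])
print("single-mic RMS error:", round(rms_error(mic0, speech), 3))
print("beamformed RMS error:", round(rms_error(enhanced, speech), 3))
```

In this toy setup the delays are known in advance. In a real device they depend on where the speaker is relative to the microphones, which is one place where learned models can help by estimating the steering or by masking noise that simple averaging cannot remove.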

Case Studies and Applications

Google Live Transcribe utilizes neural networks to provide real-time, on-device captioning across multiple languages. It is particularly effective when paired with Android devices and Bluetooth-enabled hearing aids.

Oticon More represents a leap forward in hearing aid technology. Its deep neural network has been trained on 12 million real-life sound scenes, enabling it to prioritize relevant sounds and suppress irrelevant ones.

Microsoft Azure Cognitive Services deliver real-time transcription and translation APIs, which are increasingly being embedded into platforms for accessible customer service and remote collaboration.

Limitations and Open Challenges

Despite rapid progress, challenges remain. Language diversity continues to test the limits of AI models. Dialects, accents and multilingual conversations can reduce recognition accuracy.

Latency is another concern. Edge devices, especially those as compact as hearing aids, require models that are both accurate and extremely efficient. Optimization for power and processing constraints remains an active area of research.
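The trade-off described here can be put into rough numbers. The sketch below assumes a frame-based audio pipeline and uses three simple quantities: the real-time factor (compute time divided by the duration of audio processed), the delay before output is available, and the storage needed for a model's weights at a given numeric precision. All function names and figures are hypothetical and chosen only to show the arithmetic; they are not measurements from any device.

```python
def real_time_factor(processing_ms, audio_ms):
    """Compute time divided by audio duration; below 1.0 means the device keeps up."""
    return processing_ms / audio_ms


def end_to_end_latency_ms(frame_ms, lookahead_ms, processing_ms):
    """Delay before output: wait for the frame, any future context, then compute."""
    return frame_ms + lookahead_ms + processing_ms


def model_size_mb(num_params, bits_per_weight):
    """Weight storage only, in megabytes (10**6 bytes)."""
    return num_params * bits_per_weight / 8 / 1_000_000


# Hypothetical figures for illustration only.
frame_ms, lookahead_ms, processing_ms = 10.0, 5.0, 4.0
print(real_time_factor(processing_ms, frame_ms))                     # 0.4
print(end_to_end_latency_ms(frame_ms, lookahead_ms, processing_ms))  # 19.0
print(model_size_mb(10_000_000, 32))                                 # 40.0
print(model_size_mb(10_000_000, 8))                                  # 10.0
```

Reducing weight precision from 32 bits to 8 bits cuts storage by a factor of four in this calculation, which hints at why quantization is a common lever on constrained hardware, although it can cost some accuracy.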

Privacy is perhaps the most complex challenge. Ensuring that conversational AI respects user consent while offering continuous listening functionality is a delicate balance.

The Future of Inclusive Conversational Interfaces

The next wave of advancement will be driven by personalization, multimodal feedback and ethical design principles. Personalized speech models could learn from a user’s individual hearing profile, adapting in real time to noise conditions or preferred interaction styles.
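As a point of reference for what a hearing profile can look like in code, the sketch below applies the half-gain rule, a long-established fitting heuristic that prescribes roughly half of the measured hearing threshold as gain at each frequency. It is only a static starting point, not the adaptive, learned personalization envisioned above. The audiogram values, the function names and the `max_gain_db` cap are assumptions made for illustration.

```python
def half_gain_prescription(audiogram_db_hl, max_gain_db=40.0):
    """Map hearing thresholds (dB HL per frequency in Hz) to per-band gain in dB."""
    return {
        freq_hz: min(max(threshold_db, 0.0) / 2.0, max_gain_db)
        for freq_hz, threshold_db in audiogram_db_hl.items()
    }


def db_to_amplitude(gain_db):
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


# Hypothetical audiogram: thresholds worsen toward high frequencies.
audiogram = {500: 20, 1000: 30, 2000: 45, 4000: 60}
gains = half_gain_prescription(audiogram)
print(gains)  # {500: 10.0, 1000: 15.0, 2000: 22.5, 4000: 30.0}
print(db_to_amplitude(gains[500]))
```

A personalized model could, in principle, start from a table like this and adjust it as listening conditions change, which is the step that uniform amplification cannot take.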

AI companions could become integral to daily life, offering reminders, monitoring health conditions and even providing emotional support through natural conversation.

Finally, standardized accessibility frameworks will play a key role in ensuring inclusive development practices. By aligning with guidelines such as the Web Content Accessibility Guidelines (WCAG), developers can ensure their solutions serve diverse user needs from the start.

Conclusion

Conversational AI is no longer just a convenience feature; it is becoming a core component of digital accessibility. From real-time captioning to AI-driven hearing enhancement, the potential for empowering the hearing-impaired is enormous. As engineers, product designers and researchers, the responsibility to build inclusive systems is not just technical; it’s ethical.

The future of accessibility lies in collaboration between technologists and the communities they serve. With conversational AI, we have the tools to make that future a reality.