SSD, the Unsung Hero of AI

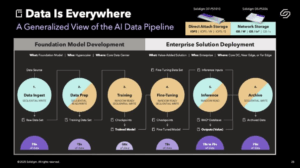

Chat-based AI, like ChatGPT, is a powerful tool, like having a very knowledgeable friend who always has the answers to general questions. The magic really happens when you add custom and private information to the AI: then you have great general knowledge mixed with very specific knowledge of your business. Fine-tuning a foundation model, or building Retrieval Augmented Generation (RAG), allows your data to be added to a general-purpose AI model. In both cases, a large amount of your data feeds into the AI, so on the path to the best business value from AI, high-capacity, high-performance, power-efficient storage is extremely valuable. The SSD storage holding the data for your AI is that unsung hero.

AI Inference Data Tiering

Exponential growth is always a challenge, and the exponential growth of AI model size is putting particular pressure on GPU memory. The GPU or GPUs working with an AI model need to hold that model in memory for fast calculations. What do you do when the model is too large for the GPU memory? You can buy more or larger GPUs, but that gets expensive, particularly if you don’t need the additional compute performance. Solidigm’s partner Metrum AI demonstrated the ability to use a fast SSD as a tier for storing the parts of large models not currently in use by the GPU, swapping model data in and out of GPU RAM as needed. Decoupling the memory and compute resources for AI opens new options for AI inference, making more use cases cost-effective.
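To make the tiering idea concrete, here is a minimal Python sketch of a two-tier store. It is an illustration only, not Metrum AI's or Solidigm's implementation: the `TieredLayerStore` class, its `fast_slots` parameter, and the least-recently-used eviction policy are all assumptions, a dictionary stands in for GPU memory, and a temporary directory stands in for the SSD.

```python
import os
import tempfile
from collections import OrderedDict


class TieredLayerStore:
    """Hypothetical two-tier store: a small fast tier backed by files on disk."""

    def __init__(self, spill_dir, fast_slots):
        self.spill_dir = spill_dir      # stands in for the SSD tier
        self.fast_slots = fast_slots    # how many layers fit in the fast tier
        self.fast = OrderedDict()       # stands in for GPU memory, oldest first
        self.loads_from_disk = 0

    def _path(self, name):
        return os.path.join(self.spill_dir, name + ".bin")

    def add(self, name, weights):
        # Every layer is written to the slow tier once. Inference only reads
        # weights, so evicting a layer never needs a write-back.
        with open(self._path(name), "wb") as f:
            f.write(weights)

    def get(self, name):
        if name in self.fast:
            # Hit: mark the layer as most recently used.
            self.fast.move_to_end(name)
            return self.fast[name]
        # Miss: read the layer from the slow tier into the fast tier.
        with open(self._path(name), "rb") as f:
            weights = f.read()
        self.loads_from_disk += 1
        self.fast[name] = weights
        if len(self.fast) > self.fast_slots:
            # Evict the least recently used layer to stay within capacity.
            self.fast.popitem(last=False)
        return weights


def demo():
    with tempfile.TemporaryDirectory() as spill_dir:
        store = TieredLayerStore(spill_dir, fast_slots=2)
        for i in range(4):
            store.add(f"layer{i}", bytes([i]) * 8)
        for name in ["layer0", "layer1", "layer0", "layer2", "layer1"]:
            store.get(name)
        return store.loads_from_disk, list(store.fast)
```

In this toy run, four "layers" share two fast slots, so most accesses fall through to the slow tier. A real system would move tensors between SSD and GPU memory and would likely prefetch the layers it expects to need next, which is where SSD throughput and latency start to matter.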

Massive SSD on Your Network

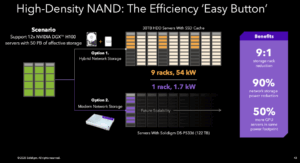

The most common specification for an SSD is its size; the Solidigm D5-P5336 offers a massive 122 TB of capacity in a 2.5-inch-wide U.2 form factor. Another measure of an SSD is its price, which is around $14,000 for this NVMe behemoth. The drive has been available since late 2024 and is already being shipped to customers inside Dell’s PowerScale storage system, giving each PowerScale F910 a raw capacity of 2.9 PB and a scaled-out cluster a raw capacity of 737 PB. With this huge SSD, the data center footprint required to deliver tens of petabytes of data for an AI training cluster shrinks from nine racks to one rack, and the power demand drops by 90% compared to a hybrid storage system that combines hard disks with an SSD cache. The power savings alone can enable the addition of more GPUs for AI in existing data centers, where power delivery is often the limiting factor for compute performance. Capacity is not the only special feature; these modern SSDs also have extreme endurance. The days of worrying about SSD wear and the number of drive writes per day are past: you can send small random writes to a drive all day, every day, for five years without wearing the drive out. The endurance doesn’t come from low performance; the drive has impressive throughput and latency specifications. Extreme endurance comes from having a lot of flash under the controller, providing more options to manage individual NAND cell life.
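The capacity figures quoted above can be sanity-checked with a little arithmetic. The sketch below assumes decimal units (1 PB = 1,000 TB) and infers the drive count per node and the node count per cluster from the rounded numbers in this article; neither count is stated here, so treat them as estimates.

```python
DRIVE_TB = 122         # per-drive capacity, from the article
NODE_RAW_PB = 2.9      # raw capacity per PowerScale F910, from the article
CLUSTER_RAW_PB = 737   # raw capacity of a scaled-out cluster, from the article

TB_PER_PB = 1000       # assumption: decimal (SI) units

# Inferred: how many 122 TB drives add up to roughly 2.9 PB per node.
drives_per_node = round(NODE_RAW_PB * TB_PER_PB / DRIVE_TB)

# Raw capacity per node, recomputed from the inferred whole number of drives.
node_raw_pb = drives_per_node * DRIVE_TB / TB_PER_PB

# Inferred: how many such nodes add up to roughly 737 PB.
nodes_per_cluster = round(CLUSTER_RAW_PB / node_raw_pb)

print(f"{drives_per_node} drives per node, {node_raw_pb:.3f} PB raw per node")
print(f"{nodes_per_cluster} nodes per cluster")
```

Under these assumptions, the quoted 2.9 PB and 737 PB figures are consistent with each other once rounding is taken into account.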

SSD Closer to Your GPU

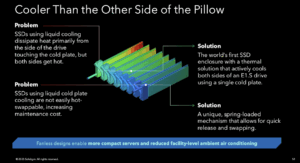

AI is also a driver for direct-attached storage, particularly the integration of swappable storage into a water-cooled NVIDIA DGX server and GPU enclosure. NVIDIA asked Solidigm to redesign the section that houses eight SSDs, eliminating the cooling fans by using liquid cooling in place of airflow. Existing small form factor SSDs use cooling fins on both sides of the drive; putting liquid cooling on both sides would prevent the drives from being swapped out. Solidigm designed a custom drive with internal heat transfer capabilities, allowing for cooling from one side, and combined this with a spring-loaded mechanism to secure each drive to a liquid cooling plate within the enclosure. The fans are gone, and the eight liquid-cooled drives fit in the same space as the older air-cooled drives without sacrificing hot-swap maintenance.

Solidigm continues to demonstrate innovation in SSD solution design, meeting the needs of very demanding AI use cases. With support for multiple form factors, from U.2 and M.2 through to the various EDSFF shapes (such as E1.S and E3.S) that are designed just for SSDs, Solidigm also supports different cost, capacity, and performance combinations to suit the different requirements throughout an AI pipeline, and in non-AI use cases.

Solidigm presented these innovations, among others, at AI Infrastructure Field Day. You can view all their presentations on this page of the Tech Field Day website.