In the AI competition, Elastic is setting a course of its own with a steady addition of new AI product capabilities. The company’s latest AI advancements include vector search in Elasticsearch, its flagship search and analytics engine built on Apache Lucene.

Elasticsearch has a full vector database running under the hood that is designed to index all kinds of unstructured data and make it searchable for users.

“We are an AppDev company expanding into more interesting use cases outside of AppDev that is based on the heritage of search,” said Philipp Krenn, director of DevRel and developer community, while talking about Elastic’s foray into vector search, an initiative that began in 2020.

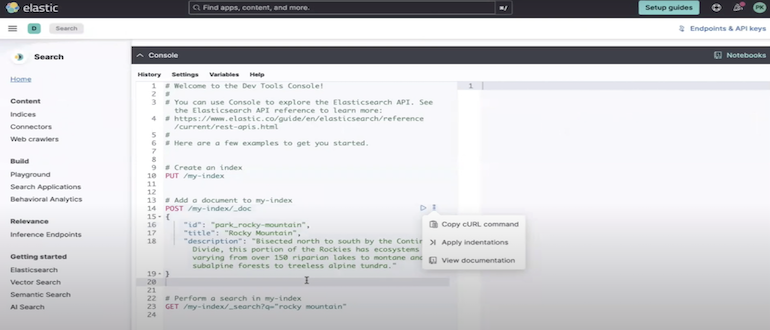

At the AI Field Day event in California, the search AI company gave a walkthrough of the platform’s augmented retrieval capabilities, which include a new ANN algorithm, Hierarchical Navigable Small World (HNSW), that it says will help enhance the responses of LLMs.

“We are focused on fast search results and HNSW is a natural fit for that.”

Large language models (LLMs) depend on fast retrieval and similarity search to produce better responses. A vector database combines many of these capabilities, making millisecond queries possible.

In addition to recording unstructured and semi-structured datasets in diverse formats – text, document, image, video and audio – a vector database also offers many advanced data management capabilities that include finding meaningful connections between two datapoints.

Within a vector database, data is stored as numerical representations of data objects, known as vectors or vector embeddings. The database indexes these vectors and with the help of algorithms, lets users query broad sets of data and get fast results. The fast query speed enables enterprises to perform analytics and analyze complex information easily.
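To make the idea of querying by vector concrete, here is a minimal sketch of an exact (exhaustive) similarity search in plain Python. The three-dimensional embeddings and the `nearest` helper are invented for illustration; real embeddings come from a model and usually have hundreds of dimensions, and this is not how Elasticsearch implements search internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query, vectors, k=2):
    """Score every stored vector against the query and return the top-k names."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in vectors.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]

# Hypothetical toy embeddings: similar concepts point in similar directions.
EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "truck": [0.0, 0.2, 0.9],
}

top = nearest([0.85, 0.15, 0.05], EMBEDDINGS, k=2)
print(top)
```

Because this version compares the query with every stored vector, its cost grows linearly with the size of the dataset, which is the problem that index structures for approximate search are meant to address.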

“Traditional databases are very much black and white,” explained Krenn. “You define the condition, and everything comes back in that condition. They struggle to combine all the aspects and have relevance.”

Vector databases are preferred for AI because they can capture similarities between two pieces of data that exist beyond the surface level. Leveraging this similarity search, also known as approximate nearest neighbor (ANN) search, LLMs can accelerate request processing and deliver complex analyses in a fraction of the time.

Elasticsearch’s built-in vector database enables developers to leverage the platform’s advanced retrieval capabilities to add context and relevance to search results, the company says.

The Hierarchical Navigable Small World algorithm was added in Elasticsearch 8.0, released in 2022. HNSW powers the platform’s ANN vector search.

The HNSW graph indexes vectors based on their similarity, boosting search performance across datasets.

“Basically, it’s like an approximation to get closer to the result. You go through multiple layers, each layer adding more datapoints to get you as close as possible to where you want to go, rather than comparing all of the documents. This makes it much faster and computationally feasible to give you results in a reasonable time without doing an exhaustive search.”
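The layered descent described in that quote can be sketched in a few lines of Python. This is a deliberately simplified toy in the spirit of HNSW, not the real algorithm and not Lucene's implementation: the layer sizes, the brute-force graph construction, and the function names (`build_layers`, `greedy_search`) are all assumptions made for illustration.

```python
import math
import random

def build_layer(points, ids, m):
    """Link each node in `ids` to its m nearest neighbours within the same layer."""
    graph = {}
    for i in ids:
        others = sorted((j for j in ids if j != i),
                        key=lambda j: math.dist(points[i], points[j]))
        graph[i] = others[:m]
    return graph

def build_layers(points, sizes, m=4, seed=0):
    """Build nested layers: sparse at the top, every point in the bottom layer."""
    order = list(range(len(points)))
    random.Random(seed).shuffle(order)
    # Each layer holds the first `size` ids, so upper layers are subsets of lower ones.
    return [build_layer(points, order[:size], m) for size in sizes]

def greedy_search(points, layers, query):
    """Walk greedily towards the query in each layer, then descend to the next."""
    current = next(iter(layers[0]))  # entry point: a node in the top layer
    for graph in layers:
        while True:
            best = min(graph[current],
                       key=lambda j: math.dist(points[j], query),
                       default=current)
            if math.dist(points[best], query) >= math.dist(points[current], query):
                break  # no neighbour is closer; drop down a layer
            current = best
    return current

rng = random.Random(42)
points = [(rng.random(), rng.random()) for _ in range(200)]
layers = build_layers(points, sizes=[4, 30, 200])
query = (0.5, 0.5)
found = greedy_search(points, layers, query)
print(found, points[found])
```

The result is approximate: the walk can stop at a point that is close to the query but not the true nearest one. That trade of a little accuracy for far fewer comparisons is what the quote describes.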

Elasticsearch supports dense vectors with flexibility for each dense_vector field to have its own HNSW graph in the schema. This allows users to easily deploy multiple models with their own sets of unique specialties.
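As a rough illustration, an index mapping with two independently configured vector fields might look like the following, built here as a Python dictionary and printed as JSON. The field names (`title_vector`, `image_vector`) and the dimension counts are hypothetical, and the parameter names are given as they appear in the Elasticsearch 8.x `dense_vector` documentation to the best of my knowledge; check them against the version you run.

```python
import json

def vector_field(dims, m=16, ef_construction=100):
    """Return a dense_vector field definition with its own HNSW settings."""
    return {
        "type": "dense_vector",
        "dims": dims,
        "index": True,
        "similarity": "cosine",
        "index_options": {
            "type": "hnsw",
            "m": m,  # neighbours per node in the graph
            "ef_construction": ef_construction,  # candidates tracked while building
        },
    }

# Hypothetical index body: one vector field per embedding model.
index_body = {
    "mappings": {
        "properties": {
            "title": {"type": "text"},
            "title_vector": vector_field(dims=384),
            "image_vector": vector_field(dims=512, m=32),
        }
    }
}

print(json.dumps(index_body, indent=2))
```

Since each field carries its own graph settings, a text model and an image model with different dimensions can sit side by side in the same index.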

When text content is too long and must be chunked into multiple embeddings, the dense_vector fields can be multi-valued to accommodate all the chunks within a single document.
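A sketch of the chunking step, under several assumptions: the chunk size and overlap are arbitrary, `toy_embed` is a stand-in that only mimics the shape of a real embedding model's output, and the `passages` layout is one hypothetical way to keep every chunk and its vector inside a single document.

```python
import math

def chunk_words(text, size=50, overlap=10):
    """Split text into word windows of `size` that overlap by `overlap` words."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]

def toy_embed(text, dims=4):
    """Stand-in for a real model: buckets character codes, then normalises."""
    vec = [0.0] * dims
    for i, ch in enumerate(text):
        vec[i % dims] += ord(ch)
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def build_document(title, text, size=50, overlap=10):
    """Keep every chunk and its vector together in one document."""
    return {
        "title": title,
        "passages": [
            {"text": chunk, "vector": toy_embed(chunk)}
            for chunk in chunk_words(text, size, overlap)
        ],
    }

long_text = " ".join(f"w{i}" for i in range(120))
doc = build_document("Example", long_text)
print(len(doc["passages"]), "passages")
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some words twice.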

In 2024, Elastic added vector compression algorithms to reduce the memory requirement for vector data.
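One common family of vector compression techniques is scalar quantization, which stores each dimension as a small integer instead of a 32-bit float. The sketch below shows the general idea with 8-bit codes; the fixed value range and the helper names are assumptions, and it should not be read as a description of Elastic's actual implementation.

```python
def quantize(vec, lo, hi):
    """Map each float in [lo, hi] to one byte (0..255), clamping outliers."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    scale = (hi - lo) / 255
    return bytes(round((min(max(v, lo), hi) - lo) / scale) for v in vec)

def dequantize(codes, lo, hi):
    """Recover approximate floats from the one-byte codes."""
    scale = (hi - lo) / 255
    return [lo + c * scale for c in codes]

vec = [-1.0, -0.5, 0.0, 0.5, 1.0]
codes = quantize(vec, lo=-1.0, hi=1.0)
restored = dequantize(codes, lo=-1.0, hi=1.0)
max_error = max(abs(a - b) for a, b in zip(vec, restored))

# One byte per dimension instead of four for float32.
print(len(codes), "bytes, max error", max_error)
```

The memory saving is roughly fourfold compared with float32, and the rounding error per dimension is bounded by half a quantization step.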

“This is the strength that Elasticsearch gives you. The ability to combine all of the different types of data and work with them to make your search more powerful and expressive. That is the core of why this search engine is so specific and different.”

AI analysis and pattern identification use cases are on the rise across enterprises. Futurum Intelligence data shows that it is one of the highest ROI-generating use cases in enterprises, racking up $14.6B in revenue. Vector search can help push AI search use cases further, enabling users to search data more efficiently and accelerating model response time.

Be sure to catch Elastic’s presentations from AI Field Day at TechFieldDay.com, or read about the Elastic vector database at Elastic.co.