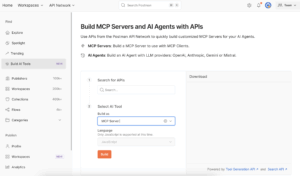

Postman has added an AI Agent Builder tool that enables application developers to create Model Context Protocol (MCP) servers that can also receive and send requests via APIs.

Originally developed by Anthropic, MCP is based on a client-server architecture that uses JSON-RPC remote procedure calls to enable AI agents to invoke functions (tools), fetch data (resources) and use predefined prompts. Because any MCP-compatible agent can talk to any MCP server, organizations no longer need to build a custom connector for each AI agent they deploy.
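To make the JSON-RPC framing more concrete, here is a minimal Python sketch that builds the kind of request messages an MCP client sends. It assumes JSON-RPC 2.0 framing and the MCP method names `tools/list`, `tools/call`, `resources/read` and `prompts/get`. The tool name, resource URI and prompt name are hypothetical. The sketch only constructs messages: it does not open a connection, perform the MCP initialization handshake or parse responses.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# Monotonically increasing request IDs; JSON-RPC 2.0 uses "id" to match
# each response to the request that produced it.
_ids = count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object (a sketch, not a full client)."""
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Ask the server which tools (functions) it exposes.
list_tools = make_request("tools/list")

# Invoke a tool; "get_weather" and its arguments are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Berlin"}},
)

# Fetch data exposed as a resource; the URI is a hypothetical placeholder.
read_resource = make_request("resources/read", {"uri": "file:///reports/q1.csv"})

# Retrieve a predefined prompt; "summarize" is a hypothetical prompt name.
get_prompt = make_request("prompts/get", {"name": "summarize", "arguments": {}})

for message in (list_tools, call_tool, read_resource, get_prompt):
    print(json.dumps(message))
```

The point of the shared framing is that the same request shape works against any MCP server, which is what lets one client replace a collection of per-agent connectors.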

Postman CEO Abhinav Asthana said AI Agent Builder makes it possible for application developers to use familiar API constructs and tooling to build an MCP server without having to write custom scripts.

Application developers will be able to select MCP as a request type, much as they would an HTTP request, to connect to any MCP server. From there, they can explore the server's capabilities and, if needed, debug it using visual tools provided by Postman.

Tools, prompts and resources are automatically loaded from the server and displayed in a structured interface. Developers can also paste the contents of an MCP configuration JSON file, such as those used by Claude or VS Code, into the URL bar to generate a working request instantly.
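For readers who have not seen one, the following Python sketch shows roughly what such a configuration file contains and how the server entries in it might be read. It assumes the `mcpServers` layout used by Claude Desktop configs (VS Code's `mcp.json` uses a top-level `servers` key instead). The server name, command, arguments and environment variable are hypothetical placeholders, and this is not a description of how Postman itself processes the file.

```python
import json
from typing import Dict, List

# A hypothetical config in the "mcpServers" layout used by Claude Desktop;
# the server name, command, args and env values are placeholders.
CONFIG_TEXT = """
{
  "mcpServers": {
    "weather": {
      "command": "python",
      "args": ["weather_server.py"],
      "env": {"API_KEY": "replace-me"}
    }
  }
}
"""


def list_servers(config_text: str) -> List[Dict[str, str]]:
    """Return one summary dict per server entry in an MCP config."""
    config = json.loads(config_text)
    # Accept either the Claude Desktop key or the VS Code key.
    servers = config.get("mcpServers") or config.get("servers") or {}
    summaries = []
    for name, entry in servers.items():
        if "url" in entry:
            # Remote server reached over HTTP.
            target = entry["url"]
        else:
            # Local server launched as a subprocess: command plus arguments.
            target = " ".join([entry["command"], *entry.get("args", [])])
        summaries.append({"name": name, "target": target})
    return summaries


for server in list_servers(CONFIG_TEXT):
    print(f"{server['name']}: {server['target']}")
```

Each entry carries enough information (a command to launch or a URL to reach) for a client to connect, which is why a pasted config can be turned directly into a request.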

It's not clear how quickly AI agents are being developed and deployed, but Futurum Group research projects that AI agents could drive up to $6 trillion in economic value by 2028, and finds that 89% of CIOs now identify agentic AI as a strategic priority.

Ultimately, AI agents are expected to become a primary interface for accessing functions within applications. The challenge is that automating any workflow will require multiple AI agents to interoperate with one another. Postman is making the case that the same APIs used today to integrate applications can also enable AI agents to communicate not only with each other but also with multiple applications.

The level of autonomy each AI agent has will naturally vary with the type of large language model (LLM) that drives it. More advanced LLMs provide more robust reasoning capabilities, but they also tend to cost more to use than previous generations of LLMs.

Regardless of approach, MCP is emerging as a de facto standard for integrating AI agents and applications. The challenge now is determining when it makes more financial sense to run a frequently invoked LLM in an on-premises IT environment rather than rely on a cloud service where every input and output token adds to the bill. The one certainty is that AI agents will consume a large number of tokens, a cost an organization might avoid by deploying an LLM itself.
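The trade-off can be framed as a simple break-even calculation: usage-based cloud pricing grows with every call, while a self-hosted deployment is treated here as a roughly fixed monthly cost. The Python sketch below illustrates that arithmetic only. Every price and token count in it is a hypothetical placeholder rather than any vendor's actual rate, and it deliberately ignores real-world factors such as on-premises capacity limits, staffing and hardware refresh cycles.

```python
import math
from dataclasses import dataclass


@dataclass
class CloudPricing:
    # Hypothetical prices in US dollars per one million tokens.
    input_per_million: float
    output_per_million: float


def cost_per_call(pricing: CloudPricing, input_tokens: int, output_tokens: int) -> float:
    """Usage-based cost of one call: every input and output token is billed."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


def break_even_calls(
    pricing: CloudPricing,
    input_tokens: int,
    output_tokens: int,
    onprem_monthly_cost: float,
) -> int:
    """Monthly call volume at which cloud spend reaches the fixed on-prem cost."""
    return math.ceil(onprem_monthly_cost / cost_per_call(pricing, input_tokens, output_tokens))


# All figures below are hypothetical, chosen only to illustrate the arithmetic.
pricing = CloudPricing(input_per_million=3.0, output_per_million=15.0)
per_call = cost_per_call(pricing, input_tokens=2_000, output_tokens=500)
calls = break_even_calls(pricing, 2_000, 500, onprem_monthly_cost=20_000.0)
print(f"cloud cost per call: ${per_call:.4f}")
print(f"break-even volume: {calls:,} calls per month")
```

Below the break-even volume the cloud service is cheaper under these assumptions; above it, a self-hosted LLM starts to pay for itself, which is why agents that are invoked frequently shift the calculation.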

One way or another, AI agents will soon be pervasively employed across enterprise IT environments. Given all the possibilities, the issue now is identifying which ones to build and deploy first, with the answer depending, as always, on the return on investment (ROI) actually achieved and sustained.