Amazon Web Services (AWS) today made generally available a serverless computing service that is optimized specifically for deploying and managing artificial intelligence (AI) agents.

The Amazon Bedrock AgentCore service combines multiple software deployment technologies so that AI agents can be deployed with a single click, says Madhu Parthasarathy, general manager for AWS AgentCore.

The overall goal is to make it much simpler and faster for organizations to deploy and manage AI agents in production environments running on the AWS cloud, he says.

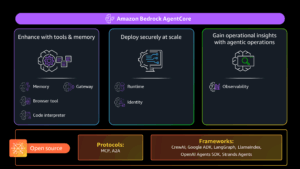

Designed to be compatible with multiple AI frameworks, including CrewAI, Google ADK, LangGraph, LlamaIndex, OpenAI Agents SDK, and Strands Agents, Amazon Bedrock AgentCore can be used to invoke any AI model available on Bedrock, as well as third-party models residing elsewhere, such as OpenAI and Gemini models, from within an integrated development environment (IDE) or various vibe coding tools.

At the core of the Amazon Bedrock AgentCore service are AgentCore Code Interpreter, which enables agents to generate and execute code in isolated environments; AgentCore Browser, which allows agents to interact with web applications; and AgentCore Runtime, which scales infrastructure resources up and down as an AI agent requires.

AgentCore Gateway, meanwhile, makes it possible to transform existing application programming interfaces (APIs) and AWS Lambda functions into agent-compatible tools that can access data via a Model Context Protocol (MCP) server or third-party connectors. AgentCore also supports the agent-to-agent (A2A) protocol to facilitate communication between AI agents.

Other components include AgentCore Memory, which helps developers create context-aware AI agents capable of understanding user preferences, historical interactions and similar relevant context; AgentCore Identity, which ensures agents operate securely with proper authentication and authorization based on OAuth standards; AgentCore Observability dashboards, which track every agent action, debug issues and generate reports using OpenTelemetry instrumentation; and a set of governance and security tools.

Collectively, these primitives make it possible for AWS to provide access to backend infrastructure services at a higher level of abstraction that ultimately reduces the total cost of deploying AI agents at scale, says Parthasarathy. “We do all the heavy lifting for each workload,” he says.

Since AgentCore was first previewed earlier this year, its SDK has been downloaded more than a million times. Early adopters include Clearwater Analytics (CWAN), Cohere Health, Cox Automotive, Druva, Ericsson, Experian, Heroku, National Australia Bank, Sony and Thomson Reuters.

It’s not clear to what degree AI agents might soon drive more organizations to embrace serverless computing frameworks, but as the amount of data accessed by AI agents increases, the need for greater infrastructure flexibility is becoming more apparent. In fact, a survey published by G2 finds that 57% of organizations have AI agents in production, with 40% having allocated more than $1 million for AI agent initiatives. More than half of organizations also said they are highly likely to expand their agent budgets over the next 12 months. The Futurum Group, meanwhile, projects AI agents will drive up to $6 trillion in economic value by 2028.

Given that level of adoption, arguably the only issue left to resolve is where best to deploy those AI agents, depending on how much data is being accessed, where, when and how.