Amazon Web Services (AWS) this week made generally available Automated Reasoning checks, a set of guardrail policies for generative artificial intelligence (AI) models on the Amazon Bedrock cloud service that validate the accuracy of model output against a specific set of verified domain knowledge.

Byron Cook, vice president and distinguished engineer at AWS, said Automated Reasoning checks in Amazon Bedrock Guardrails use mathematical logic and formal verification techniques to validate the accuracy of the output created by a generative AI model. That's critical because generative AI models are probabilistic: they do not produce the same output every time, which can also lead them to generate hallucinations that are completely inaccurate, he added.

In effect, Amazon Bedrock Guardrails uses a different class of AI technology to validate a model's output against domain knowledge that data science teams accept as a source of truth. That approach prevents inaccurate outputs from being fed into deterministic workflows that must be performed the same way every time, such as transaction processing.
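As a rough illustration of checking output against rules treated as a source of truth, the Python sketch below encodes a made-up eligibility policy as a boolean constraint, then enumerates every truth assignment consistent with that rule and the known facts to decide whether a model's claim is entailed, contradicted or left undetermined. It is a toy example, not the Amazon Bedrock implementation or API; the `check_claim` helper, the policy and the variable names are all hypothetical.

```python
from itertools import product
from typing import Callable, Dict, List

# Hypothetical types for illustration only; not part of any AWS SDK.
Assignment = Dict[str, bool]
Rule = Callable[[Assignment], bool]


def check_claim(variables: List[str], rules: List[Rule],
                premises: Assignment, claim: Rule) -> str:
    """Classify a claim by enumerating every truth assignment (toy sketch)."""
    free = [v for v in variables if v not in premises]
    outcomes = set()
    for values in product([False, True], repeat=len(free)):
        world = dict(premises)
        world.update(zip(free, values))
        # Keep only "worlds" that satisfy every domain rule.
        if all(rule(world) for rule in rules):
            outcomes.add(claim(world))
    if not outcomes:
        return "inconsistent"   # the stated facts violate the rules themselves
    if outcomes == {True}:
        return "entailed"       # the claim holds in every consistent world
    if outcomes == {False}:
        return "contradicted"   # the claim fails in every consistent world
    return "undetermined"       # the facts do not settle the claim


# Hypothetical policy: an employee is eligible for a benefit if and only if
# they work full time and have at least 12 months of tenure.
VARIABLES = ["full_time", "tenure_12m", "eligible"]
RULES: List[Rule] = [
    lambda w: w["eligible"] == (w["full_time"] and w["tenure_12m"]),
]


def says_eligible(w: Assignment) -> bool:
    # The model's (hypothetical) claim: "this employee is eligible."
    return w["eligible"]


if __name__ == "__main__":
    # Hypothetical facts: full time, but under 12 months of tenure.
    print(check_claim(VARIABLES, RULES,
                      {"full_time": True, "tenure_12m": False},
                      says_eligible))  # prints "contradicted"
```

Brute-force enumeration grows exponentially with the number of unknown variables, so it only suits toy cases like this one; practical formal verification tools rely on dedicated solvers instead.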

Amazon Bedrock Guardrails also evaluates prompts for ambiguity to ensure that the output of a generative AI model aligns with the actual intent of the end user, noted Cook. That capability is especially crucial when asking a generative AI model to generate code from prompts that could easily be misinterpreted, he added.

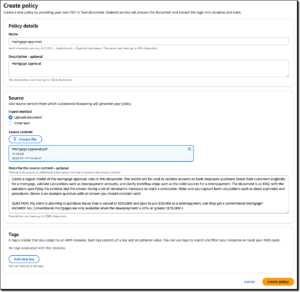

Data science teams that want to use Automated Reasoning checks in Amazon Bedrock Guardrails will need to capture their rules in a policy document written in natural language. A single build can support large documents of up to 80,000 tokens.

The challenge, of course, then becomes establishing verified domain knowledge against which the output of a generative AI model can be checked. While many larger organizations may already have defined domain knowledge bases, most, if not all, will need either to update the data used to create them or, more likely, to build many more from scratch.

On the plus side, the use of AI in mission-critical applications should steadily increase as organizations apply mathematical logic and formal verification techniques to validate outputs, which can then be trusted to automate workflows.

In the meantime, there are plenty of instances where probabilistic output can be used to streamline workflows. The degree to which those outputs increase productivity will naturally vary, but in the absence of a way to reliably validate the output of an AI model, the most prudent course is to take nothing for granted. Unless a thoroughly vetted set of guardrail policies is in place, each organization will need to rely on humans to maintain the integrity of its business processes. After all, no matter how much AI is used, responsibility for the ultimate outcome of those workflows still rests with the humans tasked with supervising them.