In late April, I was a delegate for AI Infrastructure Field Day 2. Google Cloud presented on the first day, and the delegates gathered at Google’s Moffett Park campus for a day’s worth of presentations on the Google Cloud portfolio.

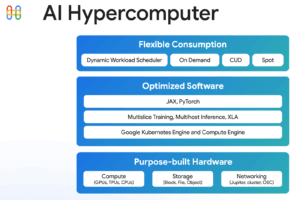

Many of the individual features were impressive, but the sheer breadth of the offering caught my attention. Google Cloud is certainly not a one-size-fits-all offering: its services range from full hosting to a la carte specialty services. This range of services is termed AI Hypercomputer.

AI Hypercomputer can dial up GPU and TPU acceleration for a variety of training and inference workloads. There are several storage options to suit your budget and workload needs. Network configurations connect protected domains in a range of ways.

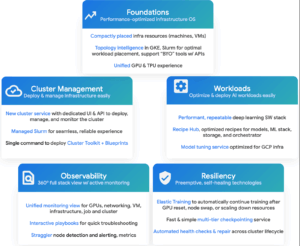

On top of the infrastructure goodies, Google Cloud manages many different aspects of your environment. With the rich Cluster Toolkit, Storage Intelligence and improvements to GKE, overseeing your cloud system is as easy as building it.

AI Infrastructure Resources in the AI Hypercomputer

AI Hypercomputer offers a number of systems with the typical range of CPU and memory. The AI systems build on older cloud-provisioned systems. A3 Ultra is newly released with Intel Emerald Rapids CPUs, up to 3 TB of memory and NVIDIA H200 GPUs. The new A4 systems are similarly equipped, with more memory (4 TB) and B200 GPUs. Google Cloud also previewed newer models that are based on NVIDIA CPUs and AMD CPUs.

AI Hypercomputer also has Tensor Processing Unit (TPU) systems based on Google’s own Ironwood TPUs. Ironwood is the 7th generation of Google’s TPU, with heady specs of thousands of teraflops, around 7 TB/s of bandwidth and expandability to pods of almost 10,000 chips. Running on frameworks such as PyTorch or JAX, inference workloads in particular reap the benefits.

AI processor capabilities aren’t the only parts of the portfolio. Google hasn’t been asleep on storage development either. Managed Lustre is Hypercomputer’s parallel filesystem. Built in collaboration with DDN on its EXAScaler technology, Google’s Managed Lustre provides a POSIX-compliant filesystem with massive capacity and throughput and sub-millisecond latencies. This is beneficial for training, checkpointing and serving.

Anywhere Cache keeps read-only copies of hot data in the same zone as your workloads. The technology integrates with existing storage buckets and works anywhere within a region.

Rapid Storage is built on top of Google’s Colossus filesystem and provides high-performance storage for AI Hypercomputer. The idea is to bring larger scale to typical cloud storage, offering up to 6 TB/s of throughput and tens of millions of requests per second.

Each of these adds storage capabilities that AI workloads need to perform at their best.

AI Hypercomputer didn’t forget about networking. Google Cloud provides a secure cross-region and cross-cloud network to support AI workloads. Different stages of a task can run in different locales, or even on-premises, over a secure, extended network (I have discussed similar features in VMware VLAN). AI tasks like ingest, training and inference can therefore operate in different clusters and locales while remaining on the same network.

While not directly related to AI Hypercomputer, Cloud WAN is one of the a la carte menu items. Cloud WAN provides backhaul network services between different datacenters and colocation facilities via Google interconnects. The idea is that Google Cloud, rather than a traditional service provider, supplies network connectivity as a subscription service.

AI Management from Google Cloud

Infrastructure hardware isn’t the extent of AI Hypercomputer. Several new management dashboards are available. The most comprehensive is Cluster Director, which oversees the architecture, workloads, deployment, monitoring and reliability of an AI project, from rollout through safe execution. As with all of the infrastructure options, you can pick and choose the features most meaningful to your use case.

GKE (Google Kubernetes Engine) integrates seamlessly with Cluster Director and the new infrastructure features, meaning GKE can oversee AI-scale workloads. Being able to stand up a complete AI workflow from one point of deployment is certainly attractive.

Finally, the Field Day presentations touched on Storage Intelligence. Storage Intelligence provides mechanisms to enforce security and compliance while automating storage placement and allocation, taking cost into account. It also provides insight into performance, bottlenecks and data transfers.

Not a Hammer, a Toolbox

AI Hypercomputer isn’t a single-use tool, and AI Infrastructure Field Day wasn’t a recitation of individual features. Each of the capabilities presented can be used individually or in concert. Google Cloud showed up demonstrating that they have cloud features for every aspect of an AI workload. They also have tools to help you understand how to use those features and what they’re doing.

Each one of the pieces provides an important and valuable tool for building a cloud or hybrid AI workflow. Taken together, they let you build whatever is needed.

Check out the AIIFD2 videos and Google Cloud resources to learn more about the features.