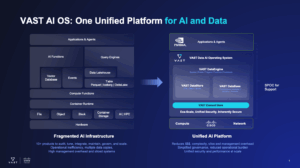

Cisco has tapped VAST Data to extend a reference design for running artificial intelligence (AI) applications, which Cisco developed in collaboration with NVIDIA. The goal is to make it simpler for IT teams to integrate a storage platform based on VAST's disaggregated, shared-everything (DASE) architecture.

Support for the Cisco Secure AI Factory with NVIDIA platform will make it simpler for IT teams to deploy applications that access data via VAST InsightEngine, an event-driven framework that enables AI applications to access data in seconds.

That capability will be especially crucial for AI agents that respond to multistep reasoning requests in real time, because they will need to access data at a scale that most existing IT platforms are not going to be able to support, says John Mao, vice president of alliances for VAST Data.

Available from Cisco, InsightEngine can now be preconfigured for deployment in a Cisco AI Pod, which is based on the Cisco Secure AI Factory reference architecture and equipped with NVIDIA graphics processing units (GPUs). The overall goal is to make it simpler for IT teams to deploy infrastructure that is optimized for agentic AI applications, notes Mao. “They will need platforms that have battle-tested integrations,” he adds.

In general, most enterprise IT teams are not going to deploy AI infrastructure platforms at scale until they have defined a standard that they can easily support, says Mao. Most existing AI applications have been built and deployed by dedicated teams that include IT professionals with experience optimizing infrastructure for AI workloads. However, as organizations look to deploy more AI applications, they will need to define a set of standards that make managing infrastructure at scale simpler, he adds.

Naturally, there is already no shortage of AI infrastructure platforms. A recent survey of IT leaders conducted by the Futurum Group reveals that 69% work for organizations that plan to change or add new AI server vendors in 2025. However, organizations also face significant challenges: 35% struggle with legacy system integration, 30% cite complex AI technology stacks, and 32% face IT resource constraints. Additionally, 26% of enterprises report skill shortages, while 27% express concerns over data quality and governance, issues that further delay AI deployment.

It’s still early days for deploying AI workloads in production environments, but it’s clear that competition among server vendors will be fierce. Each IT team will need to decide for itself which type of platform to standardize on, but as agentic AI applications become more pervasive, many previous assumptions about IT infrastructure requirements will need to change.

In the meantime, IT leaders should be preparing senior leaders for a certain amount of AI sticker shock. In general, running AI workloads in some type of on-premises IT environment is going to be more cost-effective than paying the per-token fees a cloud service provider charges for every input and output token. However, acquiring the IT infrastructure that is likely to be required will still represent a substantial increase in the total budget allocated to IT.