Amazon Web Services (AWS) has updated its managed service for building artificial intelligence (AI) models to add an ability for data scientists and application developers to invoke cloud resources when building models on their local laptops.

At the same time, AWS has added a command line interface (CLI) and software development kit (SDK) to the latest version of the Amazon SageMaker AI cloud service, providing a single, consistent set of methods for managing infrastructure.

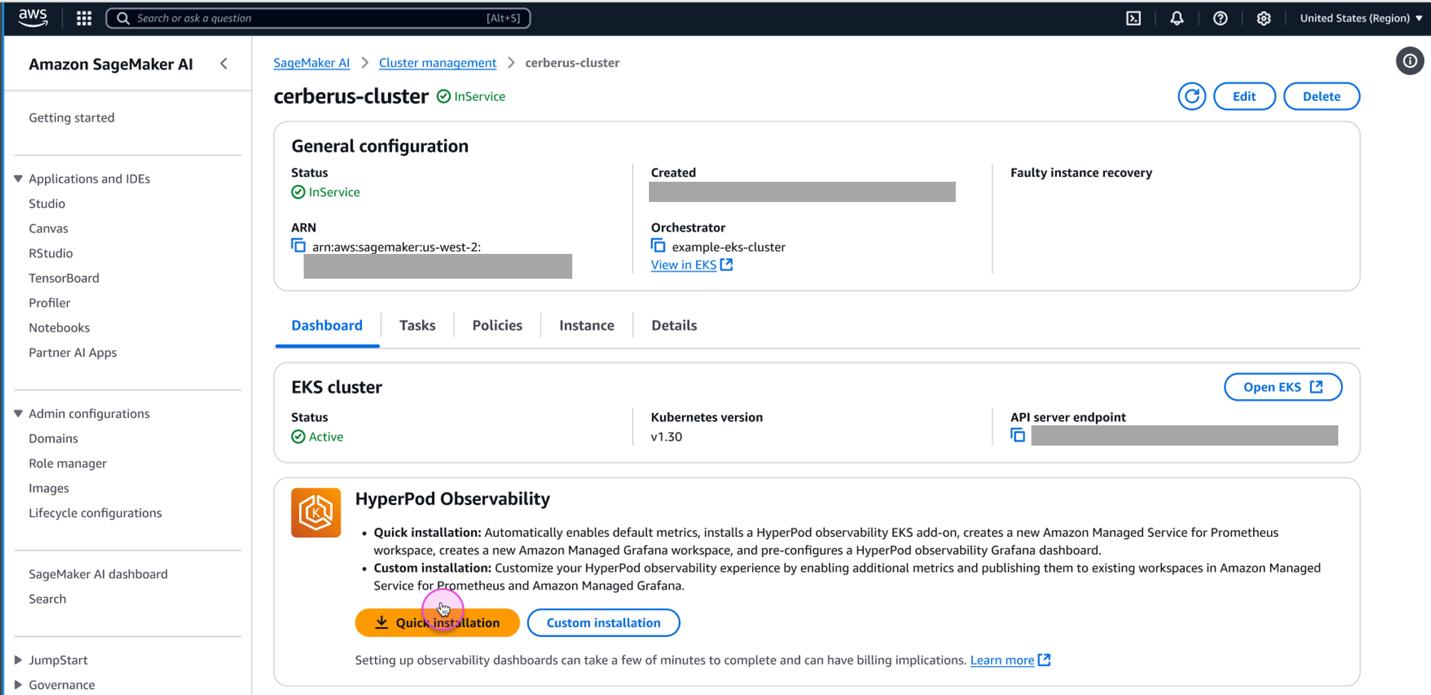

AWS has also added an observability capability to SageMaker HyperPod, the underlying framework used to manage AI infrastructure, to enable IT teams to monitor and optimize their model development workloads. Via a unified dashboard preconfigured in Amazon Managed Grafana that is integrated with an instance of open-source Prometheus monitoring software, IT teams can track performance metrics, resource utilization, and the health of their cluster. That unified view promises to make it easier to identify bottlenecks and optimize compute resources.

Finally, AWS is adding a fully managed instance of MLflow, an open-source platform for managing the machine learning operations (MLOps) lifecycle.

The overall goal is to make it simpler for builders of AI models to streamline workflows at a time when the number of projects that many teams are working on simultaneously has significantly increased, says Ankur Mehrotra, general manager of Amazon SageMaker AI. As a result, there is now a lot more focus on, for example, maximizing utilization of limited graphics processing unit (GPU) resources, he adds.

The Amazon SageMaker AI service provides a framework for building, training and deploying AI models. While some data science teams have opted to build their own frameworks, AWS has long made a case for relying on a framework that it builds and maintains on behalf of those teams. That approach is now gaining additional momentum as more organizations realize they don’t have the skills and expertise required to manage AI model infrastructure, notes Mehrotra.

Additionally, more application developers and end users are participating in the building of AI applications and agents, which requires organizations to present them with a simpler method for accessing IT infrastructure resources, he adds. “There are a lot more people getting involved,” says Mehrotra.

The remote access capabilities added to Amazon SageMaker AI will also make it easier for teams of data scientists, developers and end users using tools such as the VS Code integrated development environment (IDE) to collaborate via a cloud service that AWS manages, notes Mehrotra.

It’s not clear how many organizations have opted to rely on a managed service versus building and maintaining their own environments for building AI models. But as the pressure to deliver a return on investment in AI continues to mount, more organizations are likely to rely on external expertise to manage infrastructure so they can allocate more resources to developing AI applications and agents.

Regardless of motivation, the one certain thing is that the need to build and deploy AI models faster is not going to slacken any time soon.