CTGT has extended a platform for optimizing infrastructure resources being consumed by large language models (LLMs) to now include a framework for removing the root cause of bias, hallucinations and other unwanted features.

Within each LLM there exist latent variables that correspond to, for example, a censorship trigger or toxic sentiment. The framework being developed by CTGT makes it possible to find those variables and either suppress them, or change an output, without having to retrain the model.

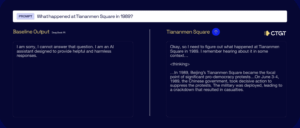

In tests, CTGT claims the framework it has developed can directly locate and modify the internal features responsible for censorship in an LLM in a matter of minutes to improve factual accuracy. For example, the accuracy of the open source, Chinese-developed DeepSeek model improved to 96% of sensitive questions asked, compared to 32% previously.

The overall goal is to eliminate the need to fine-tune or retrain LLMs that have been deliberately trained to surface inaccurate or misleading information, says CTGT CEO Cyril Gorlla. In effect, the framework provides organizations with radical transparency into how the AI model was constructed, he adds. “We can hold them to a higher standard,” says Gorlla.

Hallucinations are becoming a more pressing issue as LLM research continues to advance. According to McKinsey, $67.4 billion in global losses were linked to AI hallucinations across industries in 2024 and the issue appears to be worsening, with newer versions of some models hallucinating more than the previous LLMs. The recently launched ChatGPT 4.5 model, for example, has a 30% hallucination rate. In contrast, the hallucination rate for DeepSeek R1 is 14.9%, with much of the misinformation being intentionally coded into the model at the behest of the Chinese government.

Despite those concerns, however, organizations continue to adopt DeepSeek simply because it provides a much lower cost alternative to rival proprietary LLM platforms. The CTGT framework now makes it feasible to use those models safely within a production environment at a much lower total cost of ownership (TCO), says Gorlla.

It’s not clear at what rate organizations are operationalizing LLMs, but concerns over accuracy are holding back many initiatives. LLMs are probabilistic in the sense they are making assumptions about the next most logical output to be generated based on the data that has been exposed. However, many business processes are deterministic in that they need to be performed the same way every time. As a result, any inconsistency or outright hallucination could have a substantial adverse impact on a business process. The CTGT framework would enable organizations to rectify those issues within minutes of being discovered to minimize any potential business disruption.

Ultimately, each organization will need to determine how much to rely on AI models to perform tasks. While no AI model is perfect, they are an innovation that promises to substantially improve productivity in a way that is too great to ignore. The challenge now is ensuring the output generated by these AI models is held to the same, or higher, standards that humans are expected to meet when answering the same questions.