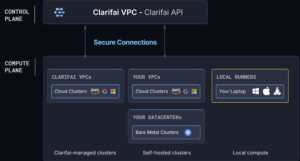

Clarifai today added an AI Runners capability to its platform that automatically extends artificial intelligence (AI) models running on local machines to external IT environments.

At the same time, as part of an effort to make its platform more accessible, Clarifai is offering a Developer Plan at a promotional price of $1 per month for the first year, after which the price will rise to $10 per month.

The AI Runners capability further advances that goal by making it possible to create a hybrid IT environment for building AI models, says Clarifai CEO Matt Zeiler.

While most data scientists and developers prefer to work with AI models running on their local machines, it’s generally only a matter of time before they run out of memory and storage, notes Zeiler. AI Runners extends the reach of a local environment by invoking a set of application programming interfaces (APIs) that expose additional infrastructure resources on demand.

Clarifai has been making the case for an integrated platform for building and deploying AI models using a set of reusable machine learning operations (MLOps) best practices that support multiple toolkits and processors. That approach, for example, enables organizations to make more efficient use of graphics processing units (GPUs), which can be more easily shared across multiple projects, notes Zeiler. The Clarifai platform also supports other classes of processors that can be used to build and deploy AI models at a lower cost, he adds.

Clarifai also offers a Compute Orchestration engine, designed to be deployed in any of the major cloud computing environments or in an on-premises IT environment, that makes it simpler to automatically scale infrastructure resources, including spot instances of cloud services, as needed. “The platform spans everything from prototype to production,” says Zeiler.

Historically, many AI models have been built by data science teams that included infrastructure specialists. However, organizations are now trying to find cost-effective ways to support multiple initiatives using a common pool of IT infrastructure resources. A recent Futurum Research survey found that organizations are increasingly focused on measurable outcomes, with 43% emphasizing deployment and support capabilities, followed by projected time to value (42%), model development and customization (36%), and the technology stack being used (33%).

Each organization will need to determine which AI projects to advance based on the resources available, but there is a clear need to focus more on infrastructure optimization when building and deploying AI models at scale. There is, after all, little point in hiring expensive AI expertise if the organization can’t afford to provide the resources those experts need to build and deploy AI models.

Hopefully, cost concerns will ease as building and deploying AI models becomes more efficient. In the meantime, however, making the most of all the infrastructure resources available inside and outside the cloud remains a significant challenge.