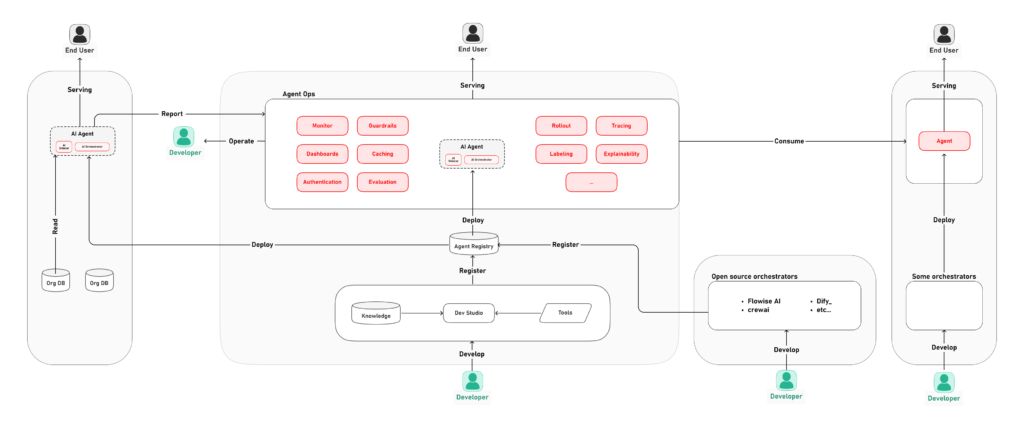

SUPERWISE today unveiled a governance platform for artificial intelligence (AI) agents created using any mix of proprietary and open-source large language models (LLMs).

This AgentOps extension to the company’s governance platform will enable organizations to prevent AI agents from performing tasks beyond the permissions that have been granted, says SUPERWISE CEO Russ Blattner.

Initially, AgentOps applies governance policies to AI agents created using Flowise, a development framework from SUPERWISE, but will soon be extended to other frameworks, including Dify, CrewAI, Langflow and n8n, he adds.

It’s still early days as far as adoption of AI agents is concerned, but the more data an AI agent is exposed to, the more it tends to expand the scope of the tasks it will try to perform unless a robust set of guardrails has been put in place. “The key to managing AI agents is to keep them from going rogue,” says Blattner.

Less clear is which teams inside organizations will assume responsibility for AI agent governance. In theory, the teams that build and deploy AI agents will have defined a set of guardrails. However, larger organizations have dedicated teams for managing governance, risk and compliance (GRC) that are ultimately responsible for ensuring the integrity of business processes. That’s critical because before too long AI agents will be pervasively deployed across enterprise IT environments. It’s all but certain that auditors will be paying close attention to how these AI agents might violate one or more compliance mandates, which could result in significant fines being levied, especially in heavily regulated industries.

Despite these concerns, the number of AI agents that need to be governed is going to increase exponentially. In fact, a recent Futurum Group report projects that AI agents will drive $6 trillion worth of economic value by 2028. The challenge is making sure that value is realized in a way that, while reducing friction, doesn’t result in AI agents inadvertently making mistakes at a scale that is difficult to rectify.

Each organization will need to determine to what degree it will trust any given autonomous AI agent to complete a task. In theory, the more often an AI agent successfully completes a task, the more likely it is to be trusted. Therein lies the issue, however: the more data an AI agent is exposed to, the more likely it becomes that it will begin to hallucinate unpredictably in ways that disrupt workflows. No one, of course, can be sure when an AI agent might go rogue, but if history is any guide, it will occur at the most inconvenient time possible.

Eventually, there will be no shortage of GRC platforms for AI agents. Less clear is to what degree AI agents will require a dedicated GRC platform versus being treated as another entity to be managed alongside the workflows already being governed by existing platforms. In the meantime, however, the time to decide how AI agents will be governed is before thousands of them have been deployed.